BigQuery Setup Guide

Follow our setup guide to connect your BigQuery data warehouse to Fivetran.

Prerequisites

To connect BigQuery to Fivetran, you need the following:

- A BigQuery account or a Google Apps account

- A Fivetran user account with permissions to create or manage destinations

Fivetran doesn't support BigQuery sandbox accounts.

Setup instructions

Choose your deployment model

Before setting up your destination, decide which deployment model best suits your organization's requirements. This destination supports both SaaS and Hybrid deployment models, offering flexibility to meet diverse compliance and data governance needs.

See our Deployment Models documentation to understand the use cases of each model and choose the model that aligns with your security and operational requirements.

You must have an Enterprise or Business Critical plan to use the Hybrid Deployment model.

Find Project ID

You need to grant Fivetran access to your BigQuery project so that we can create and manage tables for your data and periodically load data into those tables.

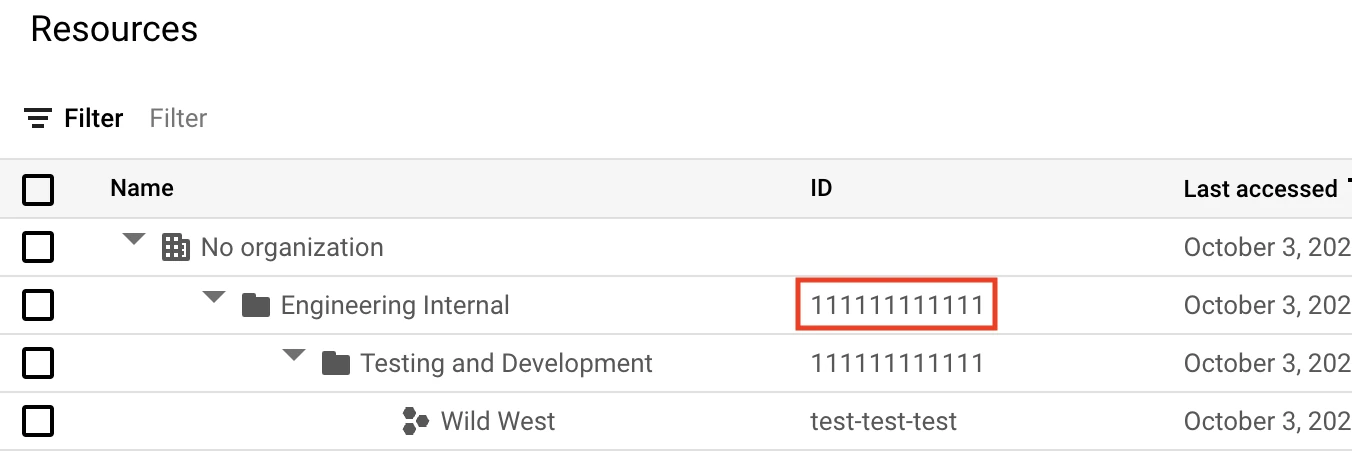

Go to your Google Cloud Console's projects list.

Find your project on the list and make a note of the project ID in the ID column. You will need it to configure Fivetran.
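If you want to sanity-check the project ID before pasting it into Fivetran, the following Python sketch applies Google Cloud's documented format for project IDs: 6 to 30 characters, made up of lowercase letters, digits, and hyphens, starting with a letter and not ending with a hyphen. The function name is illustrative and not part of any Fivetran or Google library. Older domain-scoped IDs (such as example.com:my-project) do not match this pattern.

```python
import re

# Documented format for standard project IDs: 6-30 characters of lowercase
# letters, digits, or hyphens; must start with a letter; no trailing hyphen.
# Legacy domain-scoped IDs (e.g. "example.com:my-project") are not covered.
_PROJECT_ID_RE = re.compile(r"[a-z][a-z0-9-]{4,28}[a-z0-9]")


def looks_like_project_id(project_id: str) -> bool:
    """Return True if project_id matches the standard project ID format."""
    # strip() tolerates stray whitespace picked up when copying from the console.
    return _PROJECT_ID_RE.fullmatch(project_id.strip()) is not None
```

For example, looks_like_project_id("random-project-12345") accepts the project ID used in the private key example later in this guide, while a display name such as "My Project" is rejected.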

Find Fivetran service account

Log in to your Fivetran account.

Go to the Destinations page, and then click Add destination.

In the pop-up window, enter a Destination name of your choice.

Click Add.

Select BigQuery as the destination type.

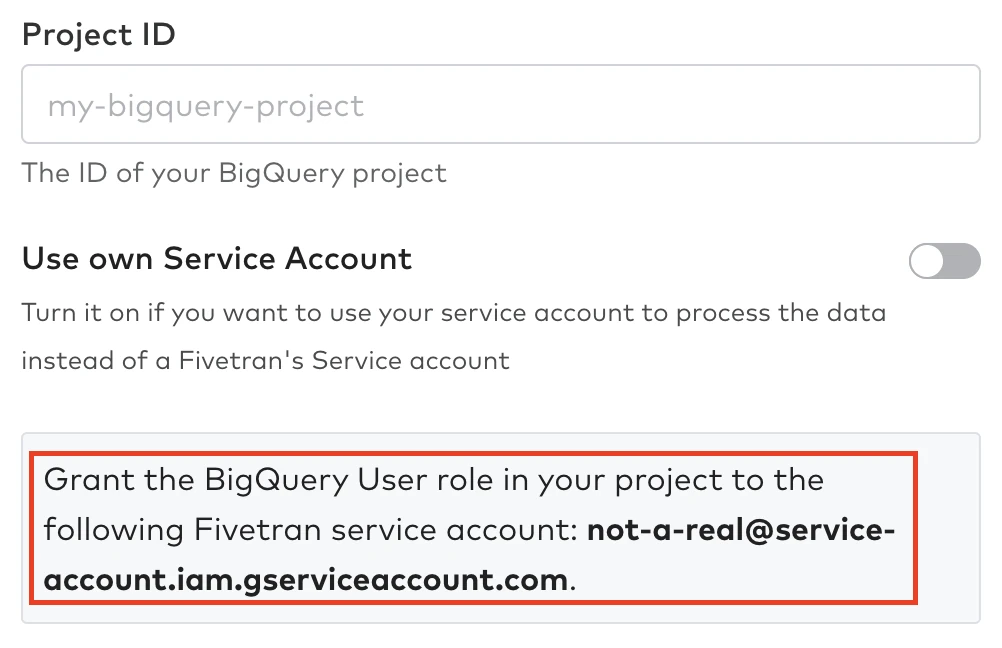

Enter the Project ID you noted in the Find Project ID step.

Make a note of the Fivetran service account. You will need to grant it permissions in BigQuery.

(Optional) Create service account

This step is mandatory for the Hybrid Deployment model. For the SaaS Deployment model, it is optional: perform it only if you want to use your own service account, instead of the Fivetran-managed service account, to control access to BigQuery.


Create a service account by following the instructions in Google Cloud's documentation.

Create a private key for your service account by following the instructions in Google Cloud's documentation. The private key must be in JSON format. Save the full contents of the JSON file; you will need them to configure Fivetran.

Example of a private key file:

    {
      "type": "service_account",
      "project_id": "random-project-12345",
      "private_key_id": "abcdefg",
      "private_key": "*****",
      "client_email": "name@project.iam.gserviceaccount.com",
      "client_id": "12345678",
      "auth_uri": "https://accounts.google.com/o/oauth2/auth",
      "token_uri": "https://oauth2.googleapis.com/token",
      "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
      "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/name%40project.iam.gserviceaccount.com"
    }
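Because Fivetran needs the entire key file, a quick local check can catch a partial copy before you paste it into the Service Account Private Key field. The Python sketch below assumes the fields shown in the example above; the function name is hypothetical, and the check only inspects the JSON locally without validating the key with Google. It returns the client_email value, which is the service account email address you grant roles to when you configure the service account.

```python
import json

# Fields taken from the example key file above. This is a local sanity check
# only; it does not verify the key against Google Cloud.
REQUIRED_FIELDS = ("type", "project_id", "private_key_id", "private_key", "client_email")


def check_service_account_key(raw_json: str) -> str:
    """Parse a key file's contents and return its client_email.

    Raises ValueError if the text is not valid JSON, is not a service account
    key, or is missing (or has empty) required fields.
    """
    try:
        key = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(key, dict):
        raise ValueError("key file must contain a JSON object")
    missing = [field for field in REQUIRED_FIELDS if not key.get(field)]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    if key["type"] != "service_account":
        raise ValueError(f"unexpected type: {key['type']!r}")
    return key["client_email"]
```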

Configure service account

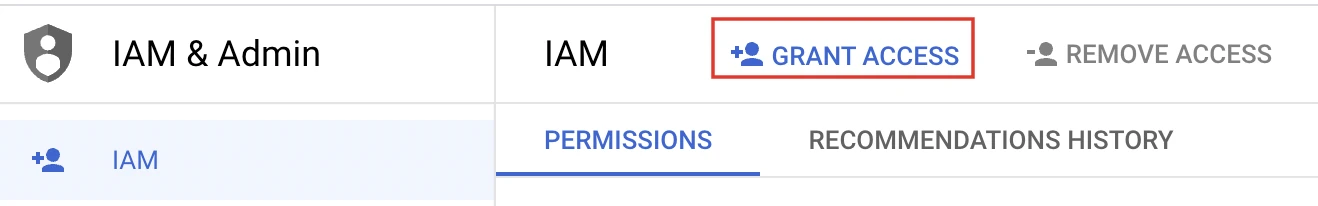

In your Google Cloud Console, go to IAM & admin > IAM to open your project's list of principals.

Select + GRANT ACCESS.

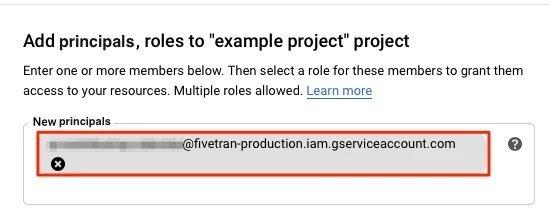

In the New Principals field, enter the Fivetran service account you noted in the Find Fivetran service account step, or the service account you created in the (Optional) Create service account step. Enter the service account's entire email address.

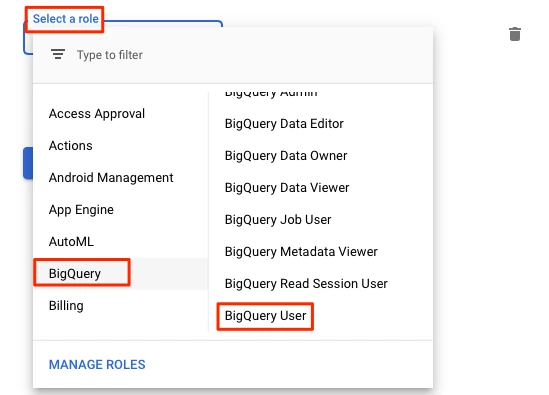

Click Select a role > BigQuery > BigQuery User.

For more information about roles, see our documentation.
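The Grant Access steps above add one binding to your project's IAM policy. The Python sketch below shows the equivalent change on a policy in the JSON shape returned by gcloud projects get-iam-policy --format=json: it adds the member serviceAccount:<email> to the roles/bigquery.user binding (the role ID of BigQuery User), creating the binding if it does not exist. The function name is hypothetical, the email reuses the example from the private key file, and writing the policy back to the project (for example with gcloud projects set-iam-policy) is outside this sketch.

```python
import copy


def add_member_to_role(policy: dict, role: str, member: str) -> dict:
    """Return a copy of an IAM policy with member bound to role.

    The policy uses the IAM JSON shape:
    {"bindings": [{"role": ..., "members": [...]}], "etag": ...}.
    Other fields such as etag are preserved. Adding an existing member is a no-op.
    """
    updated = copy.deepcopy(policy)
    bindings = updated.setdefault("bindings", [])
    for binding in bindings:
        # Only touch the unconditional binding for this role.
        if binding.get("role") == role and "condition" not in binding:
            members = binding.setdefault("members", [])
            if member not in members:
                members.append(member)
            return updated
    bindings.append({"role": role, "members": [member]})
    return updated


# IAM members for service accounts are the full email address with a
# "serviceAccount:" prefix.
FIVETRAN_MEMBER = "serviceAccount:name@project.iam.gserviceaccount.com"
```

Returning a copy rather than mutating the input makes it easy to diff the old and new policies before applying anything.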

(Optional) VPC service perimeter configuration

You must set up a Google Cloud Storage (GCS) bucket for Fivetran to process your data in either of the following scenarios:

- You want to use the Hybrid Deployment model

- You use a service perimeter to control access to BigQuery

The bucket must be in the same location as your BigQuery dataset.
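One way to catch a location mismatch before running the setup tests is to compare the two location strings without regard to case: Cloud Storage reports bucket locations in upper case (for example, EUROPE-WEST1), while BigQuery regional locations are typically written in lower case (for example, europe-west1). This Python sketch assumes you already have both strings, for example from the Cloud Console; it treats only identical locations as a match, which may differ from the exact check Fivetran performs.

```python
def same_location(bucket_location: str, dataset_location: str) -> bool:
    """Compare a GCS bucket location with a BigQuery dataset location.

    Cloud Storage returns locations in upper case (e.g. "EUROPE-WEST1"),
    while BigQuery regional locations are usually lower case
    (e.g. "europe-west1"), so the comparison ignores case and whitespace.
    """
    return bucket_location.strip().casefold() == dataset_location.strip().casefold()
```

Note that a multi-region bucket such as US does not match a single region such as us-central1 under this comparison.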

Assign permissions to service account

You must grant the service account shown in the setup form the Storage Object Admin role on the bucket so that it can read and write data in the bucket.

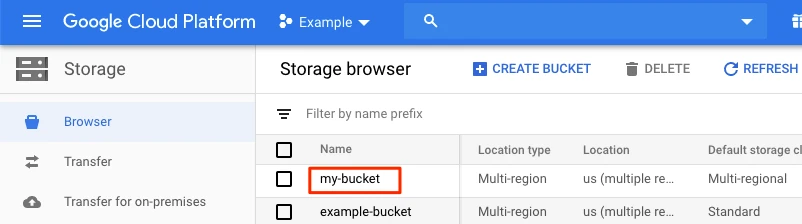

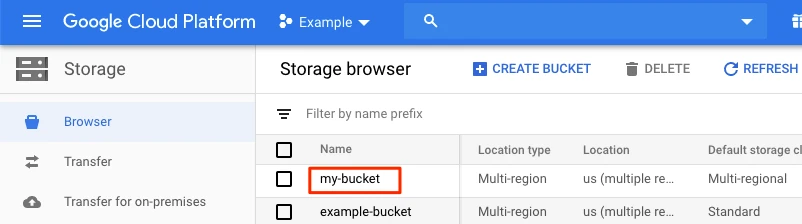

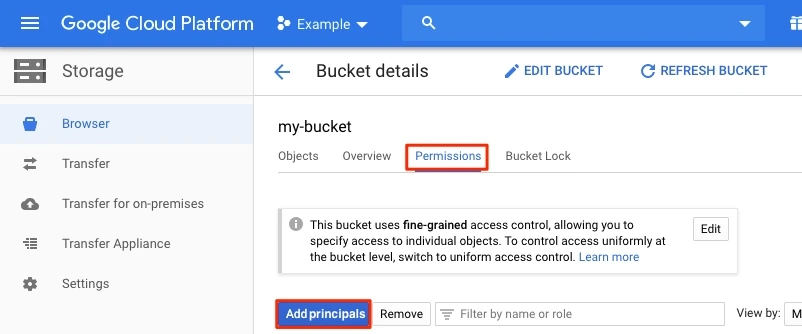

In your Google Cloud Console, go to Storage > Browser to see the list of buckets in your current project.

Select the bucket you want to use.

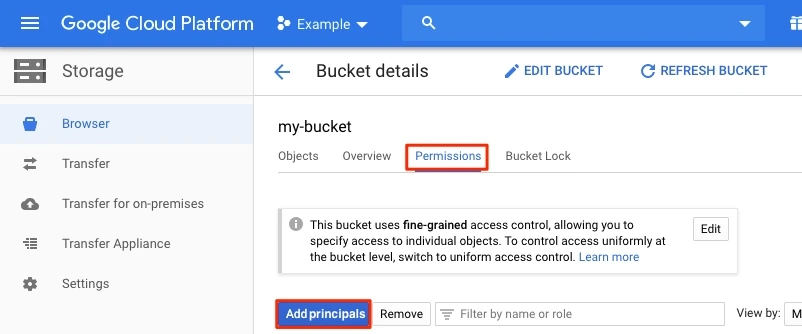

Go to Permissions and then click Add Principals.

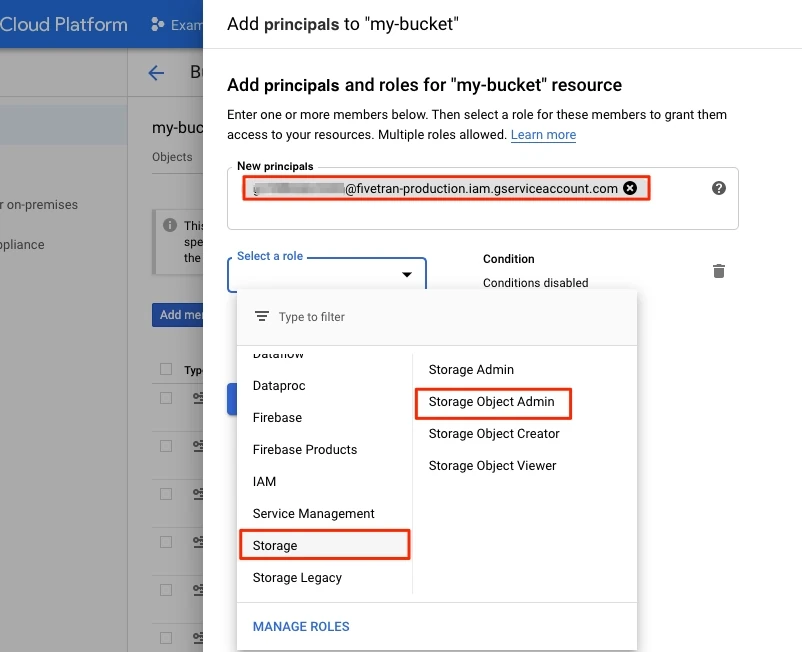

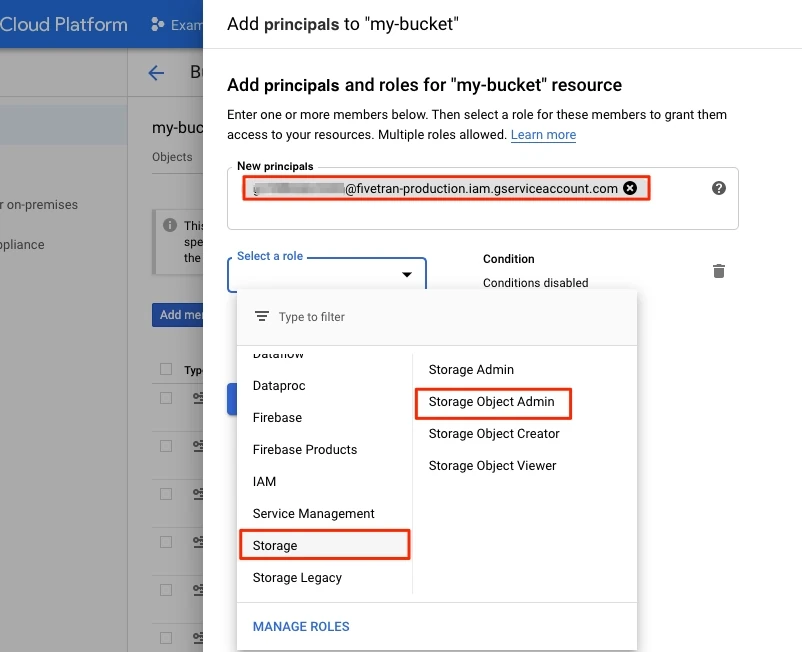

In the Add principals window, enter the Fivetran service account you noted in the Find Fivetran service account step, or the service account you created in the (Optional) Create service account step.

From the Select a role drop-down, select Storage Object Admin.
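If you prefer the command line to the Console steps above, the same grant can be made with gcloud storage buckets add-iam-policy-binding, using the role ID roles/storage.objectAdmin. The Python sketch below only assembles and prints that command so you can review it before running it yourself; the function name and bucket name are placeholders, and the email reuses the example from the private key file. The same command shape applies to the bucket you use for unstructured files.

```python
import shlex
from typing import List


def bucket_binding_command(bucket: str, service_account_email: str) -> List[str]:
    """Build (but do not run) the gcloud command that grants Storage Object Admin on one bucket."""
    if "@" not in service_account_email:
        raise ValueError("use the service account's full email address")
    return [
        "gcloud", "storage", "buckets", "add-iam-policy-binding",
        f"gs://{bucket}",
        f"--member=serviceAccount:{service_account_email}",
        "--role=roles/storage.objectAdmin",
    ]


# Placeholder bucket name; replace with the bucket you selected above.
cmd = bucket_binding_command("my-fivetran-bucket", "name@project.iam.gserviceaccount.com")
print(shlex.join(cmd))
```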

Set the lifecycle of objects in the bucket

You must set a lifecycle rule so that data older than one day is deleted from your bucket.

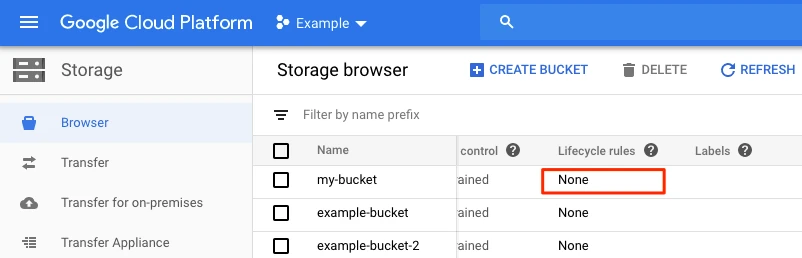

In your Google Cloud Console, go to Storage > Browser to see the list of buckets in your current project.

In the list, find the bucket you are using for Fivetran, and in the Lifecycle rules column, select its rules.

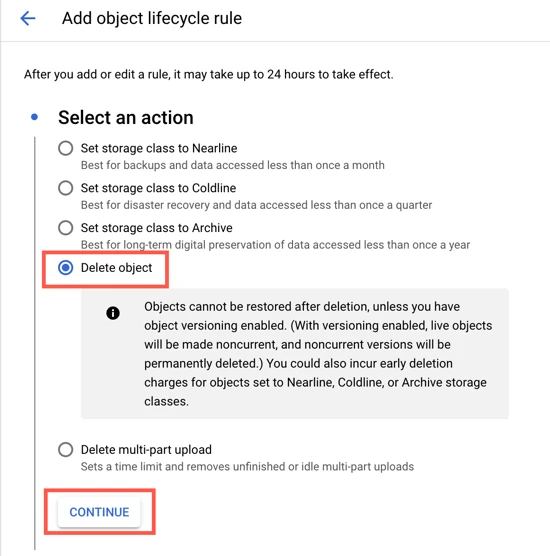

Click ADD A RULE. A detail view will open.

In the Select an action section, select Delete object.

Click CONTINUE.

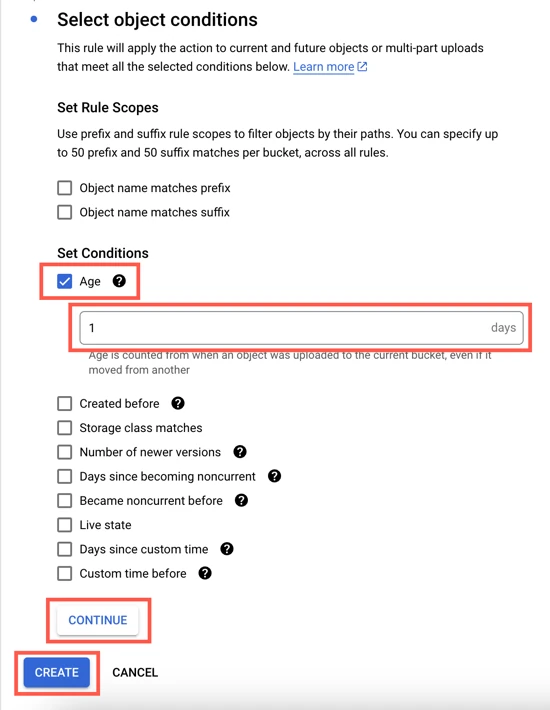

In the Select object conditions section, select the Age checkbox and then enter 1.

Click CONTINUE and then click CREATE.
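The rule created above corresponds to a single lifecycle rule with a Delete action and an age condition of one day. The Python sketch below builds that configuration in the JSON form of a bucket's lifecycle field in the Cloud Storage JSON API, which can be useful if you manage buckets from scripts rather than the Console. The function name is hypothetical, and how you apply the file (for example, through gcloud or the JSON API) is left to you.

```python
import json


def delete_after_days(days: int = 1) -> dict:
    """Build a lifecycle configuration that deletes objects once they are `days` days old."""
    if days < 0:
        raise ValueError("age must be a non-negative number of days")
    return {
        "lifecycle": {
            "rule": [
                {
                    "action": {"type": "Delete"},
                    # "age" is measured in days since the object was created.
                    "condition": {"age": days},
                }
            ]
        }
    }


config = delete_after_days(1)
print(json.dumps(config, indent=2))
```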

(Optional) Configure unstructured files

- Choose the GCS bucket where you want to store your files.

- (Optional) Choose a folder within the GCS bucket to store your files. If you do not select any folder, we will store your files directly within the GCS bucket by default.
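To illustrate the folder option above, the following Python sketch shows the difference between storing a file at the root of the bucket and storing it under a chosen folder. The function name is hypothetical, and the sketch does not describe Fivetran's actual object layout, which may include additional sub-paths beneath the folder; it only shows the bucket-root versus folder distinction.

```python
from typing import Optional


def unstructured_file_uri(bucket: str, file_name: str, folder: Optional[str] = None) -> str:
    """Return a gs:// URI for file_name, placed under folder if one is given.

    With no folder (or an empty one), the file sits at the root of the bucket,
    mirroring the default described above.
    """
    bucket_name = bucket.removeprefix("gs://").strip("/")
    parts = [bucket_name]
    if folder and folder.strip("/"):
        parts.append(folder.strip("/"))
    parts.append(file_name.lstrip("/"))
    return "gs://" + "/".join(parts)
```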

Assign permissions to service account for file storage

So that the service account can read and write data in the GCS bucket, you must grant it the Storage Object Admin role on that bucket.

In your Google Cloud Console, go to Storage > Browser and find the list of buckets in your project.

Select the bucket you want to use.

Select Permissions and click Add principals.

In the New principals field, enter the Fivetran service account you found or the service account you created.

In the Select a role drop-down menu, select Storage > Storage Object Admin.

Click Save.

Complete Fivetran configuration

Log in to your Fivetran account.

Go to the Destinations page and click Add destination.

Enter a Destination name of your choice and then click Add.

Select BigQuery as the destination type.

In the destination setup form, enter the Project ID you noted in the Find Project ID step.

(Enterprise and Business Critical accounts only) Select the deployment model of your choice:

- SaaS Deployment

- Hybrid Deployment

If you selected Hybrid Deployment, do the following:

i. Click Select Hybrid Deployment Agent, select the agent you want to use, and click Use Agent. If you want to create a new agent, click Create new agent and follow the setup instructions specific to your container platform.

ii. In the Service Account Private Key field, paste all the contents of the private key JSON file you created.

iii. Enter the Customer Bucket name you configured.

(Optional for SaaS Deployment and not applicable to Hybrid Deployment) If you want to use your own service account, do the following:

i. Set the Use own Service Account toggle to ON.

ii. In the Service Account Private Key field, paste all the contents of the private key JSON file that you created in the (Optional) Create service account step.

Enter the Data Location.

(Optional for SaaS Deployment and not applicable to Hybrid Deployment) If you want to use your own GCS bucket to process the data instead of a Fivetran-managed bucket, set the Use GCP Service Perimeter toggle to ON, and then enter the Customer Bucket name you configured in the (Optional) VPC service perimeter configuration step.

The Use GCP Service Perimeter toggle does not appear for the Hybrid Deployment model.

(Optional) To sync unstructured files, set the Enable unstructured file replication toggle to ON.

i. In the GCS bucket for unstructured files field, enter the name of your GCS bucket.

ii. (Optional) In the GCS folder for unstructured files field, enter the name of the folder where you want to store your files.

Choose the Data processing location. Depending on the plan you are on and your selected cloud service provider, you may also need to choose a Cloud service provider and cloud region as described in our Destinations documentation.

To use a Private Google Access connection, choose GCP as the Cloud service provider.

Choose your Time zone.

(Optional for Business Critical accounts and not applicable to Hybrid Deployment) To enable regional failover, set the Use Failover toggle to ON, and then select your Failover Location and Failover Region. Make a note of the IP addresses of the secondary region and safelist these addresses in your firewall.

(Not applicable to Hybrid Deployment) Copy Fivetran's IP addresses (or CIDR blocks) that you must safelist in your firewall.

Click Save & Test.
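The form rules above depend on the deployment model and plan. The Python sketch below encodes the rules stated in this guide as a local checklist you could run against your planned settings before clicking Save & Test. The DestinationPlan class, its field names, and the plan labels are hypothetical and do not correspond to any Fivetran API; they only mirror the form fields described here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DestinationPlan:
    """Hypothetical summary of the BigQuery destination form fields."""
    project_id: str
    deployment: str = "saas"       # "saas" or "hybrid"
    plan: str = "other"            # hypothetical labels: "enterprise", "business_critical", "other"
    private_key_json: Optional[str] = None
    customer_bucket: Optional[str] = None
    use_own_service_account: bool = False
    use_service_perimeter: bool = False
    use_failover: bool = False


def check_plan(p: DestinationPlan) -> List[str]:
    """Return problems found using the rules in this guide (empty list if none)."""
    problems = []
    if not p.project_id:
        problems.append("Project ID is required.")
    if p.deployment == "hybrid":
        if p.plan not in ("enterprise", "business_critical"):
            problems.append("Hybrid Deployment needs an Enterprise or Business Critical plan.")
        if not p.private_key_json:
            problems.append("Hybrid Deployment needs a service account private key.")
        if not p.customer_bucket:
            problems.append("Hybrid Deployment needs a customer bucket.")
        if p.use_failover:
            problems.append("Regional failover is not available for Hybrid Deployment.")
    else:
        if p.use_own_service_account and not p.private_key_json:
            problems.append("Use own Service Account is ON but no private key was provided.")
        if p.use_service_perimeter and not p.customer_bucket:
            problems.append("Service perimeter is ON but no customer bucket was provided.")
        if p.use_failover and p.plan != "business_critical":
            problems.append("Regional failover requires a Business Critical plan.")
    return problems
```

For example, a Hybrid Deployment plan on an Enterprise account with no private key and no bucket yields two problems, one for each missing field.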

Fivetran tests and validates the BigQuery connection. On successful completion of the setup tests, you can sync your data to the BigQuery destination using Fivetran.

In addition, Fivetran automatically configures a Fivetran Platform Connector to transfer the connection logs and account metadata to a schema in this destination. The Fivetran Platform Connector enables you to monitor your connections, track your usage, and audit changes. The connector sends all these details at the destination level.

If you are an Account Administrator, you can manually add the Fivetran Platform Connector on an account level so that it syncs all the metadata and logs for all the destinations in your account to a single destination. If an account-level Fivetran Platform Connector is already configured in a destination in your Fivetran account, then we don't add destination-level Fivetran Platform Connectors to the new destinations you create.

Setup tests

Fivetran performs the following BigQuery connection tests:

The Connection test checks if we can connect to your BigQuery data warehouse and retrieve a list of the datasets.

The Check Permissions test validates that we have the required permissions on your data warehouse. The test also checks that billing is enabled on your account and that it is not a sandbox account. As part of the test, we:

- create a dataset to check if we have the bigquery.datasets.create permission on your data warehouse.

- create a table in the dataset (bigquery.tables.create permission) and insert a row in the table (bigquery.tables.updateData permission) to check if billing has been enabled.

- create a job to check if we have the bigquery.jobs.create permission.

The Bucket Configuration test verifies that we have the Storage Object Admin permission on your data bucket if you are using your own bucket to process the data. The test also checks that the bucket is in the same location as the dataset. We skip this test if you are using a Fivetran-managed bucket.

The File Bucket Configuration test verifies that we have the Storage Object Admin permission on the GCS bucket you specified to store unstructured files. We perform this test only if you set the Enable Storing Unstructured Files toggle to ON.

The tests may take a couple of minutes to finish running.
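To anticipate a Check Permissions failure, you can compare the permissions your service account actually holds against the four permissions the test exercises. The Python sketch below does only that set comparison; obtaining the granted list (for example, from the Resource Manager testIamPermissions method or from the role details in the Cloud Console) is up to you. The permission names come from the test description in this guide, and the function name is hypothetical.

```python
from typing import Iterable, List

# Permissions exercised by the Check Permissions test, as listed above.
REQUIRED_BIGQUERY_PERMISSIONS = frozenset({
    "bigquery.datasets.create",
    "bigquery.tables.create",
    "bigquery.tables.updateData",
    "bigquery.jobs.create",
})


def missing_permissions(granted: Iterable[str]) -> List[str]:
    """Return the required permissions absent from `granted`, sorted for stable output."""
    return sorted(REQUIRED_BIGQUERY_PERMISSIONS - set(granted))
```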