Release Notes

July 2025

We have added support for the JSON data type. Previously, we converted the data type of JSON columns to STRING when writing them to your BigQuery destination.

June 2025

We have released the beta version of our Unstructured File Replication feature. The feature lets you sync unstructured files, regardless of file format, to supported destinations that have integrated object storage capabilities. You can manage and store both unstructured and structured data in a unified storage location.

The June 2025 beta release is free. We have designed the free beta program to let you test and validate your Artificial Intelligence (AI) and Retrieval-Augmented Generation (RAG) use cases at scale before the feature's general availability. We will use insights from usage during the no-cost beta period to define a transparent, value-aligned pricing model.

December 2024

We now support the following BigQuery dataset locations:

| Region | Code |
|---|---|
| Columbus, Ohio | us-east5 |
| Dallas | us-south1 |
| Santiago | southamerica-west1 |
| Toronto | northamerica-northeast2 |
| Madrid | europe-southwest1 |
| Milan | europe-west8 |
| Paris | europe-west9 |
| Berlin | europe-west10 |
| Turin | europe-west12 |
| Doha | me-central1 |
| Dammam | me-central2 |
| Tel Aviv | me-west1 |
| Johannesburg | africa-south1 |

January 2023

You no longer have to set the access control of your GCP bucket to Fine-grained. Previously, when configuring the destination, if you opted to configure a VPC service perimeter, we required you to set the access control for the bucket to Fine-grained.

November 2022

BigQuery restricts us from dropping multiple columns from a table. We have implemented a temporary workaround that lets us drop multiple columns from a BigQuery table.

If a DROP COLUMN operation fails because of a reserved column name, we recreate the table. We drop the table column using the following steps:

- Create a copy of the original table.

- Rename the copy table to the original table.

- Drop the original table.

- Drop the column from the copy table.

October 2022

We no longer support the RECORD data type. We now convert RECORD columns to STRING in your BigQuery destination.

January 2022

We now truncate NUMERIC and BIGNUMERIC column values that exceed the precision limits supported by BigQuery to the maximum allowed limits. Previously, if we found NUMERIC or BIGNUMERIC values above the BigQuery limits, we converted the values to DOUBLE.

| Data type | Precision limit | Scale limit |
|---|---|---|
| NUMERIC | 38 | 9 |
| BIGNUMERIC | 76 | 38 |
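The truncation rule can be illustrated with Python's `decimal` module. This is a minimal sketch under stated assumptions, not Fivetran's implementation: it assumes that truncation means dropping fractional digits beyond the scale limit (rounding toward zero), and it raises an error for values whose integer part doesn't fit, a case the note doesn't describe. The function name `truncate_to_bigquery` is hypothetical.

```python
from decimal import ROUND_DOWN, Decimal, localcontext

# (precision, scale) limits from the table above.
BIGQUERY_LIMITS = {"NUMERIC": (38, 9), "BIGNUMERIC": (76, 38)}


def truncate_to_bigquery(value: Decimal, bq_type: str) -> Decimal:
    """Hypothetical helper: drop fractional digits beyond the BigQuery scale limit."""
    precision, scale = BIGQUERY_LIMITS[bq_type]
    # adjusted() is the exponent of the most significant digit, so a value
    # such as 12345.6 (adjusted() == 4) has 5 integer digits.
    integer_digits = max(value.adjusted() + 1, 0)
    if integer_digits > precision - scale:
        # Assumption: the note doesn't say how oversized integer parts are handled.
        raise ValueError(f"{value} has too many integer digits for {bq_type}")
    with localcontext() as ctx:
        ctx.prec = precision  # enough digits to hold any in-range result exactly
        # ROUND_DOWN rounds toward zero, that is, it truncates the extra digits.
        return value.quantize(Decimal(1).scaleb(-scale), rounding=ROUND_DOWN)
```

For example, `truncate_to_bigquery(Decimal("1.1234567891234"), "NUMERIC")` returns `Decimal("1.123456789")`.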

October 2021

We have improved our JSON flattening feature. We now apply our standard naming conventions to JSON child column names. For example, we rename source columns such as 3h and ABC Column to _3_h and abc_column, respectively, in your destination. Previously, we didn't apply the standard naming conventions to JSON child column names.

We have implemented this change to reduce write failures in your BigQuery destination caused by unsupported JSON child column names.

After renaming the columns, if we detect a conflict between column names, we display a Task alert on your dashboard. To prevent data integrity issues, rename the conflicting columns in the JSON object.
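The renaming and the conflict check can be sketched in Python. The rules in `normalize_column_name` are assumptions chosen only to reproduce the two examples above (3h and ABC Column); Fivetran's full naming conventions may include more rules. Both function names are hypothetical.

```python
import re
from collections import defaultdict


def normalize_column_name(name: str) -> str:
    """Hypothetical naming rules that reproduce the examples 3h and ABC Column."""
    # Put an underscore between a digit and a letter, in either order: "3h" -> "3_h".
    name = re.sub(r"(?<=[0-9])(?=[A-Za-z])|(?<=[A-Za-z])(?=[0-9])", "_", name)
    # Collapse each run of other characters (spaces, punctuation) into one underscore.
    name = re.sub(r"[^0-9A-Za-z]+", "_", name)
    name = name.lower()
    # BigQuery's standard naming rules require a letter or underscore first.
    if name[:1].isdigit():
        name = "_" + name
    return name


def find_conflicts(source_names: list[str]) -> dict[str, list[str]]:
    """Map each destination name produced by two or more source names to those sources."""
    groups: dict[str, list[str]] = defaultdict(list)
    for source in source_names:
        groups[normalize_column_name(source)].append(source)
    return {dest: names for dest, names in groups.items() if len(names) > 1}
```

For example, `find_conflicts(["ABC Column", "abc_column", "3h"])` returns `{"abc_column": ["ABC Column", "abc_column"]}`: both keys map to the same destination name, which is the situation that triggers the Task alert.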

August 2021

We have added support for the BIGNUMERIC data type to store decimal values. For connections created after August 3, 2021, we convert the BIGDECIMAL data type to the BIGNUMERIC data type in your BigQuery destination. We are gradually rolling out this change to all existing connections.

You can now opt to use your own service account to load data into your BigQuery destination. To do so, set the Use own Service Account toggle to ON. For more information, see our setup instructions.

July 2021

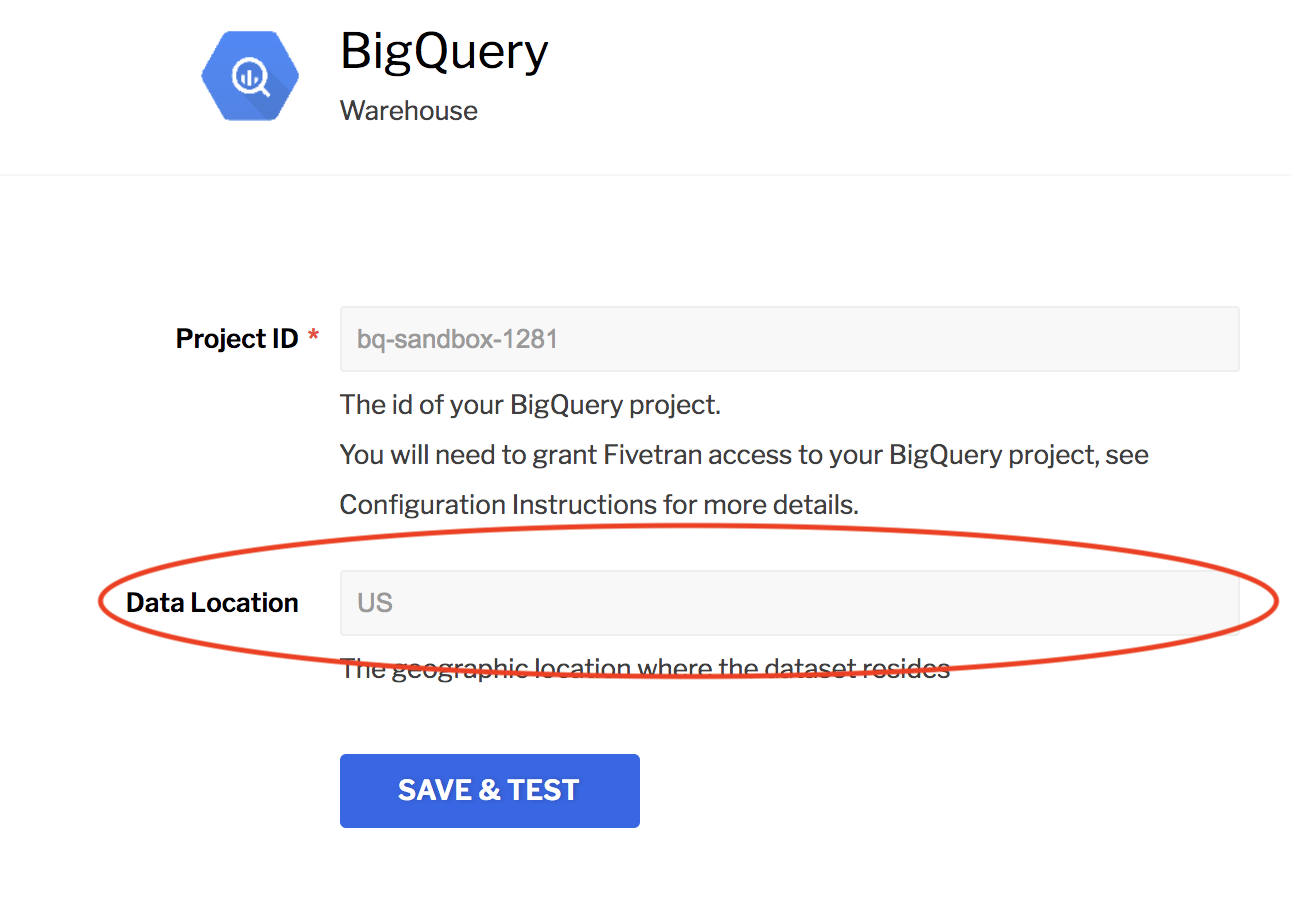

We now support the following regions as BigQuery dataset locations. In the destination setup form, you can select your data location.

| Region Name | Region Description |
|---|---|
| us-east1 | South Carolina |
| us-central1 | Iowa |
| us-west1 | Oregon |
| us-west3 | Salt Lake City |
| us-west4 | Las Vegas |
| europe-central2 | Warsaw |
| europe-west4 | Netherlands |
| asia-south2 | Delhi |
| asia-northeast3 | Seoul |
| australia-southeast2 | Melbourne |

May 2021

We now support the europe-west1 region as a BigQuery dataset location. In the destination setup form, select EUROPE_WEST_1 as your data location.

| Region Name | Region Description |
|---|---|
| europe-west1 | Belgium |

We have added support for the following scenarios as part of the JSON flattening feature:

- If a JSON column is null, an empty array, or an empty object, we don't create the column in your destination. For example, if we receive a JSON structure such as `{"json_col":{"num":1, "arr":[]}}`, `{"json_col":{"num":1, "arr":{}}}`, or `{"json_col":{"num":1, "arr":null}}`, we don't create the `json_col.arr` column in the BigQuery destination.

- BigQuery doesn't support NULL values in an array. If null values are present in an array, we convert the ARRAY type to STRING type. For example, if we receive arrays that contain null values, such as `[1, 2, 3, null]` or `["name_1", "name_2", "null", null]`, we write the data to the BigQuery destination as the STRING `"[1, 2, 3, null]"` or `"[\"name_1\", \"name_2\", \"null\", null]"`.

- BigQuery supports arrays such as `[{"name":"name_1", "nums":[1, 2, 3]}]` or `[{"name":"name_2", "nums":[11, 22, 33]}]`, but doesn't support nested arrays (arrays of arrays), such as `[[1, 2, 3], [4, 5, 6]]`. We convert the inner arrays to STRING values, `["[1, 2, 3]", "[4, 5, 6]"]`, and then write the data to the BigQuery destination.
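The three flattening rules above can be sketched as a small recursive flattener in Python. This is an illustration under assumptions, not Fivetran's implementation: `flatten` and `to_bigquery_value` are hypothetical names, child columns use the `parent.child` form from the `json_col.arr` example, and `json.dumps` formatting stands in for however the STRING values are actually rendered.

```python
import json
from typing import Any


def to_bigquery_value(value: Any) -> Any:
    """Apply the array rules to one JSON value (hypothetical sketch)."""
    if isinstance(value, list):
        if any(item is None for item in value):
            # BigQuery arrays can't hold NULL: write the whole array as a STRING.
            return json.dumps(value)
        # Nested arrays aren't supported: serialize each inner array to a STRING.
        return [json.dumps(item) if isinstance(item, list) else item for item in value]
    return value


def flatten(obj: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Flatten a JSON object into destination columns named parent.child."""
    columns: dict[str, Any] = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if value is None or value == [] or value == {}:
            continue  # null, empty array, or empty object: create no column
        if isinstance(value, dict):
            columns.update(flatten(value, name + "."))
        else:
            columns[name] = to_bigquery_value(value)
    return columns
```

For example, `flatten({"json_col": {"num": 1, "arr": []}})` returns `{"json_col.num": 1}`, with no `json_col.arr` column, and `to_bigquery_value([[1, 2, 3], [4, 5, 6]])` returns `["[1, 2, 3]", "[4, 5, 6]"]`.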

March 2021

We no longer force column names to lowercase; we now leave the case of column names unchanged in your destination tables. Your queries and scripts will continue to run because BigQuery column names are case-insensitive.

If you previously synced a column name containing uppercase letters to a destination table and a column's data type later changes in that table, we rename all the columns in the table to lowercase.

We now support JSON flattening. This feature is not enabled by default because we don't support automatic data type promotion from STRING to JSON. See our documentation for more information. If you'd like to enable the feature on your destination, contact our support team. After we enable the feature, you must use your connector's dashboard to drop and re-sync the tables. JSON columns consume more computation resources, and you may observe longer sync durations for the tables.

We now support tables that are partitioned based on a DATETIME column.

We only support time partitioning based on daily (DAY) granularity.

December 2020

We no longer encode primary key STRING values that contain emojis. Previously, we used Base64 encoding to transform STRING values in primary key columns if they contained characters in the Unicode range D800-DFFF. New connections created after December 5, 2020, support this new behavior by default.

October 2020

We now support clustering for partitioned and non-partitioned tables. See our clustered tables documentation for more information.

We now support partitioning for update queries. During an update operation, if your partition key is based on the destination primary key, we scan only the corresponding partitions instead of the full table. We are gradually rolling out this feature to all existing destinations. If you'd like to enable it on your destination, contact our support team.

September 2020

We have added a new connector setup test to validate whether billing is enabled for the BigQuery project.

August 2020

We now support tables that are partitioned based on an INTEGER column. See our partitioned tables documentation for more details.

July 2020

For partitioned tables, we ignore the partition and scan the whole table in the following scenarios:

- If you have selected a partition column that is not a primary key column

- If you have created an ingestion-time partitioned table

For example, if the partition column is _fivetran_synced or the table is partitioned by ingestion time, we ignore the partition and scan the whole table while executing MERGE or DELETE queries to ensure data integrity.

If no DATE or INSTANT column in your partitioned table is part of the primary key, we scan the whole table in every sync to remove duplicate records.

We recommend that you select a partition column that is part of the primary key so that we can scan only the corresponding partitions.
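The scan decision described above can be sketched in Python. This is a hypothetical helper under assumptions: it only models DAY partitions on date or timestamp columns (INTEGER range partitions aren't covered), rows are plain dictionaries, and returning `None` stands for a full table scan.

```python
from datetime import date, datetime
from typing import Iterable, Optional


def partitions_to_scan(
    rows: Iterable[dict],
    partition_column: Optional[str],
    primary_key: list[str],
    ingestion_time_partitioned: bool,
) -> Optional[set[date]]:
    """Return the DAY partitions a MERGE or DELETE must touch, or None for a full scan."""
    if ingestion_time_partitioned or partition_column not in primary_key:
        # Partition column isn't part of the primary key: scan the whole table.
        return None
    days: set[date] = set()
    for row in rows:
        value = row[partition_column]
        # datetime is a subclass of date, so check it first and keep only the day.
        days.add(value.date() if isinstance(value, datetime) else value)
    return days
```

With `_fivetran_synced` as the partition column and a primary key of `["id"]`, the helper returns `None`, matching the full-scan case in the note.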

We have added a task to your Fivetran dashboard that informs you when you have exceeded your API rate limit, causing syncs to fail. To increase your quota limit, contact BigQuery support.

June 2020

We now replicate empty tables in a PostgreSQL source database as empty tables in the destination.

March 2020

We are continuing to migrate BigQuery destinations to unique service accounts.

Starting on March 3, 2020, we are restricting the usage of the fixed service accounts fivetran-client-writer@digital-arbor-400.iam.gserviceaccount.com and managedcustomerwriter@digital-arbor-400.iam.gserviceaccount.com.

Destinations that still use these service accounts will break, and their syncs and setup tests will fail.

To repair your destination, you must migrate it to a unique service account. Go to the Destination section of your dashboard, click Save & Test, and follow the instructions that appear in the pop-up window.

We have added support for the DATE data type.

We now convert the LOCALDATE data type to the DATE data type in the destination. Previously, we converted the LOCALDATE data type to the TIMESTAMP data type.

As of March 5, 2020, this functionality is enabled by default for new connectors.

This change doesn't affect connectors configured before March 5, 2020. For those connectors, we continue to convert the LOCALDATE type to the TIMESTAMP type.
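The cutoff rule can be expressed as a small Python function. This is a hypothetical sketch that assumes the connector's creation date is the only factor deciding the mapping; `localdate_destination_type` and `LOCALDATE_AS_DATE_SINCE` are illustrative names.

```python
from datetime import date

# Hypothetical constant: connectors created on or after this date write LOCALDATE as DATE.
LOCALDATE_AS_DATE_SINCE = date(2020, 3, 5)


def localdate_destination_type(connector_created: date) -> str:
    """Hypothetical helper: BigQuery type used for a LOCALDATE source column."""
    if connector_created >= LOCALDATE_AS_DATE_SINCE:
        return "DATE"
    # Connectors configured before March 5, 2020, keep the old mapping.
    return "TIMESTAMP"
```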

January 2020

We have added support for BigQuery projects that are access-limited by a GCP VPC service perimeter. A customer-provided Google Cloud Storage bucket is required to ingest data into such a BigQuery project. For step-by-step instructions on how to set up a bucket with your BigQuery destination, see our BigQuery destination setup instructions.

We are changing the way we access BigQuery destinations. The new design uses a unique service account for each destination instead of a fixed service account. The destinations that still use the fixed service account must migrate to a unique service account before March 1, 2020. These destinations will have a migration task in the Alerts section of the dashboard explaining how to perform the migration.

September 2019

Our BigQuery destination now supports tables partitioned by ingestion time. To learn how to use them, see our partitioned table documentation.

July 2019

Newly created destinations will use a unique service account to access data instead of a fixed service account. See our updated setup instructions.

For existing destinations, we provide a way to migrate to unique service accounts. Whenever you save a transformation or destination configuration, a pop-up window guides you through the migration process.

We now support all BigQuery regional locations as data locations, so you can choose any of them as your data location. Query processing occurs in the data location you choose. The newly supported locations are:

Americas

| Region Name | Region Description |
|---|---|
| us-west2 | Los Angeles |
| northamerica-northeast1 | Montréal |
| us-east4 | Northern Virginia |
| southamerica-east1 | São Paulo |

Europe

| Region Name | Region Description |
|---|---|
| europe-north1 | Finland |
| europe-west2 | London |
| europe-west6 | Zürich |

Asia Pacific

| Region Name | Region Description |
|---|---|
| asia-east2 | Hong Kong |
| asia-south1 | Mumbai |
| asia-northeast2 | Osaka |
| asia-east1 | Taiwan |
| asia-northeast1 | Tokyo |
| asia-southeast1 | Singapore |
| australia-southeast1 | Sydney |

May 2019

Fivetran now syncs values in the Unicode range D800-DFFF as Base64-encoded strings if the column is a primary key column.

Without this encoding, these values are synced as �� in the BigQuery destination. When they appear in a primary key column, different source values become the same primary key ��, which produces conflicting rows with duplicate primary keys.

According to Google's documentation, the Unicode range D800-DFFF is not allowed in identifiers because it consists of surrogate code points.
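The encoding rule can be sketched in Python. Python strings hold code points rather than UTF-16 code units, so the check treats any code point above U+FFFF (which UTF-16 stores as a surrogate pair in the D800-DFFF range, as with most emojis) and any lone surrogate as needing encoding. The helper names and the choice to Base64-encode the UTF-8 bytes are assumptions, not a description of Fivetran's code.

```python
import base64


def needs_encoding(value: str) -> bool:
    """True if the string would contain UTF-16 surrogate code units (D800-DFFF)."""
    # Code points above U+FFFF become surrogate pairs in UTF-16;
    # lone surrogates can also appear directly in a Python str.
    return any(ord(ch) > 0xFFFF or 0xD800 <= ord(ch) <= 0xDFFF for ch in value)


def encode_primary_key(value: str) -> str:
    """Hypothetical helper: Base64-encode primary key strings that need it."""
    if not needs_encoding(value):
        return value
    # "surrogatepass" lets lone surrogates be encoded instead of raising an error.
    raw = value.encode("utf-8", "surrogatepass")
    return base64.b64encode(raw).decode("ascii")
```

Plain ASCII keys pass through unchanged, while a key containing an emoji becomes an ASCII-only Base64 string that decodes back to the original value, so distinct source keys stay distinct in the destination.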

December 2018

We now import BIGDECIMAL values to the BigQuery destination as NUMERIC data types.

October 2018

We’ve made two major updates to the way we load data into BigQuery:

We are now using the interactive priority method for our queries instead of the batch priority method. Although the batch priority method appeared to suit Fivetran’s batch update use case, the BigQuery team informed us that it simply queued our jobs without actually improving write performance.

We have also re-enabled the use of BigQuery's MERGE statement. This reduces the number of data manipulation language (DML) statements per load from two to one.

As a result of these updates, the data-writing process is more than five times faster than it was previously.

April 2018

We stage incoming BigQuery data in a special schema, which is configured to automatically delete its contents after seven days. For users with large data volumes, storing these tables for seven days can be costly, so we now drop them immediately. Note that if errors occur during the loading process, tables may still occasionally take seven days to be dropped.

We have renamed the staging schema from _fivetran_staging to fivetran_schema. Schemas whose names start with an underscore (_) are hidden in BigQuery, so users couldn't view this schema to change its permissions.

You can now set your preferred dataset location to EU in the BigQuery setup wizard. After you set the location, Fivetran creates all of its datasets in that location.