Managed Data Lake Service

Our Managed Data Lake Service provides a flexible and open approach to storing and managing data in your data lake. It leverages open standards, formats, and interfaces, ensuring compatibility across different ecosystems. The service writes data as Parquet files and maintains metadata for both Iceberg and Delta Lake tables simultaneously, allowing you to use the format that best suits your workflows without committing to a single table type.

By default, our service manages metadata through the Fivetran Iceberg REST Catalog, which any Iceberg REST Catalog client can access. You can also configure the service to update other catalog services, keeping data governance consistent across environments.

Fivetran streamlines the setup process by enabling you to configure multiple catalogs from a single interface. This flexibility lets you choose from various catalog and query engine options, minimizing vendor lock-in and enabling you to work with the tools that best suit your needs.

For more information about how catalogs work with Managed Data Lake Service and the supported catalogs, see our Catalogs documentation.

Supported storage providers

Managed Data Lake Service supports data lakes built using the following storage providers:

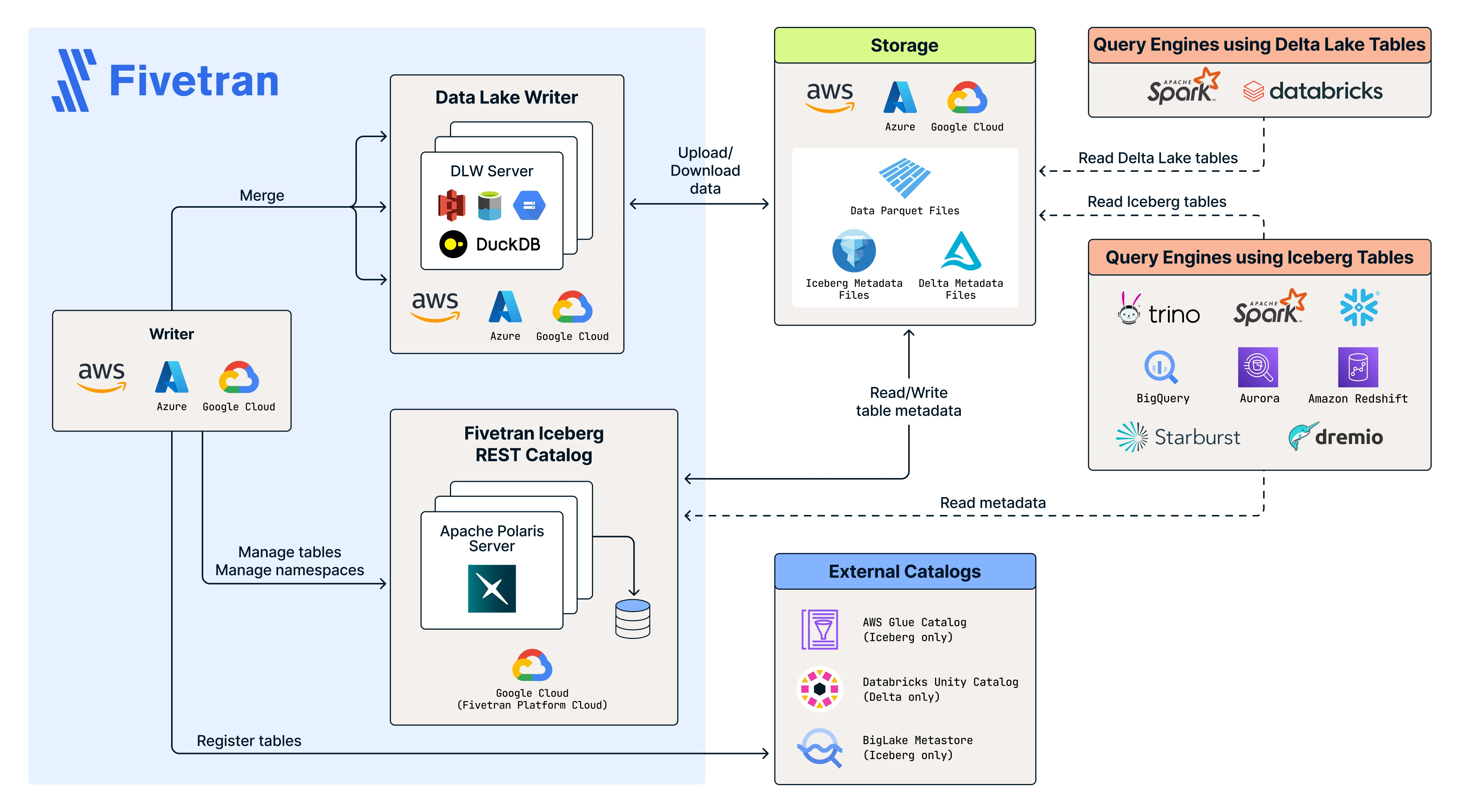

Architecture

The following diagram outlines the high-level architecture of Managed Data Lake Service, including the role of the Fivetran Iceberg REST Catalog:

Supported query engines

You can use various query engines to retrieve data from your data lake. The following table provides examples of query engines you can use for each data lake:

| Query Engine | AWS | ADLS | GCS |
|---|---|---|---|
| Amazon Athena | | | |
| Apache Spark | | | |
| Azure Synapse Analytics | | | |
| Bauplan | | | |
| BigQuery | | | |
| Databricks | | | |
| DuckDB | | | |
| Dremio | | | |
| Redshift | | | |
| Snowflake | | | |
| Starburst Galaxy | | | |

For more information about the query engines you can use, see the Apache Iceberg and Delta Lake documentation. If you cannot retrieve your data with your chosen query engine, contact our support team.

Data and table formats

Fivetran organizes your data in a structured format within the data lake. We convert your source data into Parquet files within the Fivetran pipeline and store them in designated tables in your data lake. We support Delta Lake and Iceberg table formats for managing these tables in the data lake.

Delta table protocol versions supported by Fivetran

Fivetran supports Delta tables with the following protocol versions:

- minReaderVersion: 1

- minWriterVersion: 2

Fivetran does not support Delta tables that use higher protocol versions.
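To check an existing Delta table against these limits before Fivetran writes to it, you can read the newest protocol action from its transaction log. The following is a minimal sketch, assuming you have already loaded the text of each `_delta_log/<version>.json` commit file in ascending version order. It ignores Parquet checkpoint files, so it cannot see a protocol action that survives only in a checkpoint. The function names `latest_protocol` and `fivetran_compatible` are hypothetical helpers, not part of any Fivetran or Delta Lake API.

```python
import json
from typing import Iterable, Optional, Tuple

# Highest protocol versions this page lists as supported.
MAX_READER_VERSION = 1
MAX_WRITER_VERSION = 2


def latest_protocol(commits: Iterable[str]) -> Optional[Tuple[int, int]]:
    """Return (minReaderVersion, minWriterVersion) from the newest protocol action.

    `commits` holds the text of each _delta_log/<version>.json file in ascending
    version order; each file contains one JSON action per line.
    Checkpoint files are not read in this sketch.
    """
    protocol = None
    for commit in commits:
        for line in commit.splitlines():
            if not line.strip():
                continue  # skip blank lines
            action = json.loads(line)
            if "protocol" in action:
                p = action["protocol"]
                # A later protocol action replaces any earlier one.
                protocol = (p["minReaderVersion"], p["minWriterVersion"])
    return protocol


def fivetran_compatible(protocol: Optional[Tuple[int, int]]) -> bool:
    """True if the protocol does not exceed the supported reader and writer versions."""
    if protocol is None:
        return False  # no protocol action seen, so compatibility is unknown
    reader, writer = protocol
    return reader <= MAX_READER_VERSION and writer <= MAX_WRITER_VERSION


commits = [
    '{"protocol": {"minReaderVersion": 1, "minWriterVersion": 2}}\n'
    '{"metaData": {"id": "example-table", "configuration": {}}}',
    '{"add": {"path": "part-00000.parquet"}}',
]
print(fivetran_compatible(latest_protocol(commits)))  # True
```

Returning `False` when no protocol action is found is a deliberately conservative choice: a table whose protocol cannot be confirmed is treated as unsupported.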

Recommended workaround

Avoid enabling Delta Lake features that exceed the supported reader and writer versions of the table protocol. If a table already uses unsupported versions, recreate the table with compatible versions or publish the data to a consumer-safe Delta table that meets the supported protocol requirements.

Do not access the Parquet files directly from your data lake. Instead, always use the table metadata to read or query the data.

Storage directory

We store your data in the <root>/<prefix_path>/<schema_name>/<table_name> directory of your data lake.
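If you need to locate a table's directory, for example to review storage usage, the path can be assembled from its four parts. This is a minimal sketch; the bucket, prefix, schema, and table names in the example are hypothetical, and treating an empty prefix path as omitted is an assumption. Plain string joining is used instead of `pathlib`, because `pathlib` would collapse the double slash in a URI root such as `s3://`.

```python
def table_directory(root: str, prefix_path: str, schema_name: str, table_name: str) -> str:
    """Join the parts of <root>/<prefix_path>/<schema_name>/<table_name>."""
    segments = [root.rstrip("/")]
    for part in (prefix_path, schema_name, table_name):
        cleaned = part.strip("/")
        if cleaned:  # assumption: an empty prefix path is simply omitted
            segments.append(cleaned)
    return "/".join(segments)


print(table_directory("s3://example-bucket", "fivetran/", "salesforce", "account"))
# s3://example-bucket/fivetran/salesforce/account
```

Use this path only to locate a table. To read its data, go through the table metadata rather than the Parquet files.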

Table maintenance operations

To maintain an efficient and optimized data storage environment, Fivetran performs regular maintenance operations on your data lake tables. These operations are designed to manage storage consumption and enhance query performance for your data lake.

The maintenance operations we perform are as follows:

- Deletion of old snapshots and removed files: We delete table snapshots that are older than the Snapshot Retention Period you specify in the destination setup form. For Delta tables, we always retain the last 4 checkpoints of a table before deleting its snapshots. We also delete removed files, which are files that older snapshots referenced but the latest table snapshots no longer reference. Removed files add to your storage provider subscription costs, so we identify those that are older than the snapshot retention period and delete them. This cleanup process runs once daily.

- Deletion of previous versions of metadata files: In addition to the current version, we retain 3 previous versions of the metadata files and delete all the prior versions.

- Staging and deletion of orphan files: Unsuccessful operations within your data pipeline can leave orphan files in your storage. These files are no longer referenced in any table metadata and add to your storage provider subscription costs. Every other Saturday, we identify orphan files and move them to a separate folder named orphans inside the table directory. Orphan data files are moved to the orphans/data folder, and orphan metadata files are moved to the orphans/metadata folder. In each subsequent run, we permanently delete from storage the orphan files that were moved during the previous run.
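The retention rules above can be illustrated with a few small functions. This is a simplified sketch, not Fivetran's implementation: it assumes snapshot age is measured from each snapshot's creation time, represents metadata files by integer version numbers, and does not model the Delta rule of retaining the last 4 checkpoints. All function names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, List

PREVIOUS_METADATA_VERSIONS_KEPT = 3  # current version plus 3 previous versions


def snapshots_to_expire(snapshots: Dict[int, datetime], current_id: int,
                        retention: timedelta, now: datetime) -> List[int]:
    """IDs of snapshots created before now - retention; the current one is never expired."""
    cutoff = now - retention
    return sorted(sid for sid, created in snapshots.items()
                  if sid != current_id and created < cutoff)


def metadata_versions_to_delete(versions: List[int]) -> List[int]:
    """Keep the newest version and the 3 before it; return the older versions."""
    keep = set(sorted(versions)[-(PREVIOUS_METADATA_VERSIONS_KEPT + 1):])
    return sorted(v for v in versions if v not in keep)


def orphan_folder(table_dir: str, is_metadata: bool) -> str:
    """Folder inside the table directory where an orphan file is staged."""
    sub = "metadata" if is_metadata else "data"
    return f"{table_dir.rstrip('/')}/orphans/{sub}"


now = datetime(2024, 1, 10)
snapshots = {1: datetime(2024, 1, 1), 2: datetime(2024, 1, 5), 3: datetime(2024, 1, 9)}
print(snapshots_to_expire(snapshots, current_id=3, retention=timedelta(days=7), now=now))  # [1]
print(metadata_versions_to_delete([1, 2, 3, 4, 5, 6]))  # [1, 2]
```

With a 7-day retention period the cutoff is January 3, so only snapshot 1 qualifies; the current snapshot is excluded even when it is older than the cutoff.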

Do not modify or delete the orphans folder or its contents under any circumstances. Deleting or modifying the folder or its contents can permanently damage your tables. Fivetran manages this folder through automated maintenance operations.

To ensure that every table is queryable, we recommend against deleting any metadata files, as such deletions can corrupt the Iceberg tables.

Column statistics

Fivetran updates two column-level statistics, minimum value and maximum value, for your data lake tables. We update column-level statistics to enhance query performance and optimize storage in your data lake.

- If the table contains 200 or fewer columns, we update the statistics for all the columns.

- If the table contains more than 200 columns, we update the statistics for the _fivetran_synced column and all primary key columns. If history mode is enabled for the table, we also update the statistics for the _fivetran_active, _fivetran_end, and _fivetran_start columns.
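The column-selection rule above can be expressed as a short function. This is an illustrative sketch: `columns_with_stats` is a hypothetical name, and returning the selected columns in table order is a presentation choice, not documented behavior.

```python
from typing import List, Sequence

STATS_COLUMN_LIMIT = 200
HISTORY_MODE_COLUMNS = ["_fivetran_active", "_fivetran_end", "_fivetran_start"]


def columns_with_stats(columns: Sequence[str], primary_keys: Sequence[str],
                       history_mode: bool) -> List[str]:
    """Columns that receive min/max statistics under the rules described above."""
    if len(columns) <= STATS_COLUMN_LIMIT:
        return list(columns)
    wanted = {"_fivetran_synced", *primary_keys}
    if history_mode:
        wanted.update(HISTORY_MODE_COLUMNS)
    # Keep only columns that exist in the table, preserving table order.
    return [c for c in columns if c in wanted]


wide_table = ["id", "_fivetran_synced", "_fivetran_start", "_fivetran_end",
              "_fivetran_active"] + [f"c{i}" for i in range(200)]
print(columns_with_stats(wide_table, ["id"], history_mode=False))
# ['id', '_fivetran_synced']
```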

Reserved column names

The Iceberg table format does not allow columns with the following names:

- _deleted

- _file

- _partition

- _pos

- _spec_id

- file_path

- pos

- row

To avoid conflicts with these reserved names, Fivetran prefixes any column that uses one of them with a hash symbol (#) before writing it to an Iceberg table. For example, a column named pos is written as #pos.

For more information about Iceberg's reserved field names, see the Iceberg documentation.
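The renaming rule can be sketched as a lookup against the reserved names listed above. This sketch assumes an exact, case-sensitive match on lowercase names; how Fivetran treats mixed-case source names is not covered on this page. `iceberg_safe_name` is a hypothetical helper.

```python
# Column names the Iceberg table format reserves, as listed above.
ICEBERG_RESERVED_NAMES = frozenset({
    "_deleted", "_file", "_partition", "_pos", "_spec_id",
    "file_path", "pos", "row",
})


def iceberg_safe_name(column: str) -> str:
    """Prefix a reserved column name with '#'; return other names unchanged."""
    return f"#{column}" if column in ICEBERG_RESERVED_NAMES else column


print([iceberg_safe_name(c) for c in ["id", "pos", "_file", "position"]])
# ['id', '#pos', '#_file', 'position']
```

When you query an Iceberg table, reference the prefixed name (for example, #pos) rather than the original source column name.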

Type transformation and mapping

The data types in your data lake follow Fivetran's standard data type storage.

We use the following data type conversions:

| FIVETRAN DATA TYPE | DESTINATION DATA TYPE (ICEBERG TABLE FORMAT) | DESTINATION DATA TYPE (DELTA LAKE TABLE FORMAT) | Notes |
|---|---|---|---|
| BOOLEAN | BOOLEAN | BOOLEAN | |
| INT | INTEGER | INTEGER | |
| LONG | LONG | LONG | |
| BIGDECIMAL | DECIMAL(38, 10), DOUBLE, or STRING | DECIMAL(38, 10), DOUBLE, or STRING | |
| FLOAT | FLOAT | FLOAT | |
| DOUBLE | DOUBLE | DOUBLE | |
| LOCALDATE | DATE | DATE | |
| INSTANT | TIMESTAMPTZ | TIMESTAMP | |
| LOCALDATETIME | TIMESTAMP | TIMESTAMP | |
| STRING | STRING | STRING | |
| BINARY | BINARY | BINARY | |
| SHORT | INTEGER | INTEGER | |
| JSON | STRING | STRING | |
| XML | STRING | STRING | |
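If you generate downstream schemas programmatically, the mapping table can be encoded as a lookup. This is a sketch that simply transcribes the table above; `TYPE_MAP` and `destination_type` are hypothetical names. BIGDECIMAL is kept as its three possible outcomes because the table does not say which one applies to a given column.

```python
from typing import Dict, Tuple

# Fivetran type -> (Iceberg type, Delta Lake type), transcribed from the table above.
TYPE_MAP: Dict[str, Tuple[str, str]] = {
    "BOOLEAN": ("BOOLEAN", "BOOLEAN"),
    "INT": ("INTEGER", "INTEGER"),
    "LONG": ("LONG", "LONG"),
    # BIGDECIMAL may be written as any one of these three types.
    "BIGDECIMAL": ("DECIMAL(38, 10), DOUBLE, or STRING",
                   "DECIMAL(38, 10), DOUBLE, or STRING"),
    "FLOAT": ("FLOAT", "FLOAT"),
    "DOUBLE": ("DOUBLE", "DOUBLE"),
    "LOCALDATE": ("DATE", "DATE"),
    "INSTANT": ("TIMESTAMPTZ", "TIMESTAMP"),
    "LOCALDATETIME": ("TIMESTAMP", "TIMESTAMP"),
    "STRING": ("STRING", "STRING"),
    "BINARY": ("BINARY", "BINARY"),
    "SHORT": ("INTEGER", "INTEGER"),
    "JSON": ("STRING", "STRING"),
    "XML": ("STRING", "STRING"),
}


def destination_type(fivetran_type: str, table_format: str) -> str:
    """Look up the destination type for the 'iceberg' or 'delta' table format."""
    iceberg_type, delta_type = TYPE_MAP[fivetran_type.upper()]
    fmt = table_format.lower()
    if fmt == "iceberg":
        return iceberg_type
    if fmt == "delta":
        return delta_type
    raise ValueError(f"unknown table format: {table_format!r}")


print(destination_type("INSTANT", "iceberg"), destination_type("INSTANT", "delta"))
# TIMESTAMPTZ TIMESTAMP
```

INSTANT is the only type whose mapping differs between the two formats: Iceberg keeps the time zone semantics with TIMESTAMPTZ, while Delta Lake uses TIMESTAMP.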

Supported regions

Supported AWS Regions

For AWS data lakes, we support S3 buckets located in the following AWS Regions:

| Region | Code |
|---|---|
| US East (N. Virginia) | us-east-1 |
| US East (Ohio) | us-east-2 |
| US West (Oregon) | us-west-2 |
| AWS GovCloud (US-West) | us-gov-west-1 |
| Europe (Frankfurt) | eu-central-1 |
| Europe (Ireland) | eu-west-1 |
| Asia Pacific (Mumbai) | ap-south-1 |
| Asia Pacific (Singapore) | ap-southeast-1 |
| Canada (Central) | ca-central-1 |
| Europe (London) | eu-west-2 |
| Asia Pacific (Sydney) | ap-southeast-2 |
| Asia Pacific (Tokyo) | ap-northeast-1 |

Supported Azure regions

For Azure data lakes, we support ADLS containers located in the following Azure regions:

| Region | Code |
|---|---|
| East US 2 | eastus2 |
| Central US | centralus |
| East US | eastus |
| West US 3 | westus3 |
| Australia East | australiaeast |
| UK South | uksouth |
| West Europe | westeurope |
| Germany West Central | germanywestcentral |
| Canada Central | canadacentral |
| UAE North | uaenorth |
| Southeast Asia | southeastasia |
| Japan East | japaneast |
| Central India | centralindia |

Supported Google Cloud regions

For GCS data lakes, we support Cloud Storage buckets in all Google Cloud regions.
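Before you set up the destination, you can check a bucket or container region against the lists above. This is a sketch that transcribes those lists; the provider keys `aws`, `azure`, and `gcs` and the function name are hypothetical. For GCS the function returns `True` for any input, because all Google Cloud regions are supported; it does not validate that the region name exists.

```python
SUPPORTED_REGIONS = {
    "aws": frozenset({
        "us-east-1", "us-east-2", "us-west-2", "us-gov-west-1",
        "eu-central-1", "eu-west-1", "ap-south-1", "ap-southeast-1",
        "ca-central-1", "eu-west-2", "ap-southeast-2", "ap-northeast-1",
    }),
    "azure": frozenset({
        "eastus2", "centralus", "eastus", "westus3", "australiaeast",
        "uksouth", "westeurope", "germanywestcentral", "canadacentral",
        "uaenorth", "southeastasia", "japaneast", "centralindia",
    }),
}


def is_supported_region(provider: str, region: str) -> bool:
    """Check a region code against the supported-region lists above."""
    provider = provider.lower()
    if provider == "gcs":
        return True  # all Google Cloud regions are supported
    return region.lower() in SUPPORTED_REGIONS.get(provider, frozenset())


print(is_supported_region("aws", "us-east-1"), is_supported_region("aws", "us-west-1"))
# True False
```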

To minimize data transfer costs from your cloud storage provider, we recommend selecting a Data processing location in the same region as your data lake during setup.

Supported deployment models

We support the SaaS Deployment model for the destination.

Setup guide

Follow our step-by-step Managed Data Lake Service setup guide to connect your data lake with Fivetran.

Limitations

Common limitations for all storage providers

Fivetran does not support position deletes for Iceberg tables. To avoid errors, do not run queries that generate position deletes.

Fivetran does not support the Change Data Feed feature for Delta tables. Do not enable Change Data Feed for the Delta tables that Fivetran creates in your data lake.
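To confirm that Change Data Feed has not been enabled on a table, you can inspect the table property delta.enableChangeDataFeed in the newest metaData action of the Delta transaction log. Each metaData action replaces the previous one, so only the latest action matters. This is a minimal sketch under the same assumptions as any log-reading script: you supply the text of each `_delta_log/<version>.json` commit in ascending order, and checkpoint files are ignored. `change_data_feed_enabled` is a hypothetical helper.

```python
import json
from typing import Iterable


def change_data_feed_enabled(commits: Iterable[str]) -> bool:
    """True if the newest metaData action sets delta.enableChangeDataFeed to true."""
    enabled = False
    for commit in commits:
        for line in commit.splitlines():
            if not line.strip():
                continue  # skip blank lines
            meta = json.loads(line).get("metaData")
            if meta is not None:
                # A later metaData action replaces the earlier table metadata.
                config = meta.get("configuration", {})
                value = str(config.get("delta.enableChangeDataFeed", "false"))
                enabled = value.lower() == "true"
    return enabled


commits = ['{"metaData": {"id": "example-table", "configuration": {}}}']
print(change_data_feed_enabled(commits))  # False
```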

Managed Data Lake Service does not support Transformations.

Limitations for AWS data lakes

Fivetran does not support the following storage tiers due to their long retrieval times (ranging from a few minutes to 48 hours):

- S3 Glacier Flexible Retrieval

- S3 Glacier Deep Archive

- S3 Intelligent-Tiering Archive Access tier

- S3 Intelligent-Tiering Deep Archive Access tier
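If you audit objects in your bucket, these tiers correspond to values that the S3 API reports: the StorageClass values GLACIER (S3 Glacier Flexible Retrieval) and DEEP_ARCHIVE, and, for Intelligent-Tiering objects, the ArchiveStatus values ARCHIVE_ACCESS and DEEP_ARCHIVE_ACCESS. The sketch below is a pure function that classifies those values; it assumes you obtain them elsewhere, for example from the `StorageClass` and `ArchiveStatus` fields of an S3 HeadObject response. The function name is hypothetical.

```python
from typing import Optional

# S3 StorageClass values for the unsupported Glacier tiers.
UNSUPPORTED_STORAGE_CLASSES = frozenset({"GLACIER", "DEEP_ARCHIVE"})
# ArchiveStatus values S3 reports for Intelligent-Tiering archive tiers.
UNSUPPORTED_ARCHIVE_STATUSES = frozenset({"ARCHIVE_ACCESS", "DEEP_ARCHIVE_ACCESS"})


def in_unsupported_tier(storage_class: Optional[str],
                        archive_status: Optional[str] = None) -> bool:
    """True if an object sits in one of the storage tiers listed above."""
    if storage_class in UNSUPPORTED_STORAGE_CLASSES:
        return True
    return (storage_class == "INTELLIGENT_TIERING"
            and archive_status in UNSUPPORTED_ARCHIVE_STATUSES)


print(in_unsupported_tier("INTELLIGENT_TIERING", "ARCHIVE_ACCESS"))  # True
```

S3 Glacier Instant Retrieval (GLACIER_IR) is not in the list above, so this sketch does not flag it.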

AWS Glue Catalog supports only one table for each combination of schema name and table name within a Region. As a result, using multiple Fivetran Platform Connectors with the same Glue Catalog across different AWS data lakes can cause naming conflicts and integration issues. To avoid such conflicts, configure the Fivetran Platform Connector for each AWS data lake with a distinct schema name.
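To spot such conflicts before they happen, you can group planned tables by Region, schema name, and table name and flag any key claimed by more than one connector. This is an illustrative sketch: the connector IDs and schema names are hypothetical, and lowercasing names reflects the assumption that Glue compares names case-insensitively.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def glue_conflicts(
    tables: Iterable[Tuple[str, str, str, str]],
) -> Dict[Tuple[str, str, str], List[str]]:
    """Map each (region, schema, table) key to its connectors, if more than one claims it.

    Each input tuple is (connector_id, region, schema_name, table_name).
    """
    owners: Dict[Tuple[str, str, str], Set[str]] = defaultdict(set)
    for connector, region, schema, table in tables:
        # Assumption: Glue treats names case-insensitively, so compare lowercase.
        owners[(region, schema.lower(), table.lower())].add(connector)
    return {key: sorted(c) for key, c in owners.items() if len(c) > 1}


planned = [
    ("conn_a", "us-east-1", "fivetran_log", "account"),
    ("conn_b", "us-east-1", "fivetran_log", "account"),
    ("conn_c", "us-east-1", "fivetran_log_lake2", "account"),
    ("conn_d", "eu-west-1", "fivetran_log", "account"),
]
print(glue_conflicts(planned))
# {('us-east-1', 'fivetran_log', 'account'): ['conn_a', 'conn_b']}
```

Giving the second connector its own schema name, as conn_c does, removes the conflict.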

Limitations for Azure data lakes

Fivetran creates DECIMAL columns with maximum precision and scale (38, 10).

Spark SQL pool queries cannot read the maximum values of DOUBLE and FLOAT data types.

Fivetran does not support the archive access tier because its retrieval time can extend to several hours.

Spark SQL pool queries truncate TIMESTAMP values to seconds. To query a table with a TIMESTAMP column and get accurate values, including milliseconds and microseconds, use the following clause in your queries:

unixtime(unix_timestamp(<col_name>, 'yyyy-MM-dd HH:mm:ss.SSS'),'yyyy-MM-dd HH:mm:ss.ms')