Logs

Fivetran generates and logs several types of data related to your account and destinations:

- Structured log events from connections, dashboard user actions, and Fivetran API calls
- Account- and destination-related metadata that includes:
  - Role/membership information
  - Data flow
  - Granular consumption information

You can use this data for the following purposes:

- Monitoring and troubleshooting of connections
- Tracking your usage
- Conducting audits

You can monitor and process this data in your Fivetran account by using either of the following:

Fivetran Platform Connector is a free connector that delivers your logs and account metadata to a schema in your destination. We automatically add a Fivetran Platform connection to every destination you create. The Fivetran Platform Connector is available on all plans. Learn more in our Fivetran Platform Connector documentation.

The MAR that Fivetran Platform connections generate is free, though you may incur costs in your destination. Learn more in our pricing documentation.

External log services

As an alternative to the Fivetran Platform Connector, you can use any of the following external log services:

You can connect one external logging service per destination. Fivetran will write log events for all connections in the destination to the connected service. If there is a logging service that you would like but that is not yet supported, let us know.

The log events are in a standardized JSON format:

    {
      "event" : <Event name>,
      "data" : {
        // Event-specific data. This section is optional and varies for each relevant event.
      },
      "created" : <Event creation timestamp in UTC>,
      "connector_type" : <Connector type>,
      "connection_id" : <Connection ID>,
      "connection_name" : <Connection name>,
      "sync_id" : <Sync identifier as UUID>,
      "exception_id" : <Fivetran error identifier>
    }

| Field | Description |
| --- | --- |
| event | Event name |
| data | Optional object that contains event type-specific data. Its contents vary depending on log event type |
| created | Event creation timestamp in UTC |
| connector_type | Connector type |
| connection_id | Connection ID |
| connection_name | Connection name. It is either schema name, schema prefix name, or schema table prefix name |
| sync_id | Sync identifier as UUID. This optional field is only present in sync-related events generated by Fivetran connections |
| exception_id | Optional field that is only present if Fivetran encountered an unexpected problem |

See our Log event details documentation to learn about event-specific data.

Some events may not be defined in the Log event details documentation as they are either connector type-specific or don't have the data object.
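A consumer of these events might parse each line into a typed record before routing it. The sketch below is a minimal, unofficial example in Python: `LogEvent` and `parse_log_event` are hypothetical names, the sample values are made up, and treating every field other than `event` and `created` as possibly absent is an assumption made for robustness rather than something the format guarantees.

```python
import json
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LogEvent:
    """One log event in the standardized JSON format (illustrative type)."""
    event: str
    created: str
    connector_type: Optional[str] = None
    connection_id: Optional[str] = None
    connection_name: Optional[str] = None
    sync_id: Optional[str] = None       # only present in sync-related events
    exception_id: Optional[str] = None  # only present after an unexpected problem
    data: dict = field(default_factory=dict)  # optional, event-specific


def parse_log_event(raw: str) -> LogEvent:
    """Parse one JSON log line, tolerating absent optional fields."""
    obj = json.loads(raw)
    return LogEvent(
        event=obj["event"],
        created=obj["created"],
        connector_type=obj.get("connector_type"),
        connection_id=obj.get("connection_id"),
        connection_name=obj.get("connection_name"),
        sync_id=obj.get("sync_id"),
        exception_id=obj.get("exception_id"),
        data=obj.get("data") or {},
    )


# Hypothetical sample line; every value here is invented for illustration.
sample = json.dumps({
    "event": "sync_start",
    "created": "2024-01-01T00:00:00.000Z",
    "connector_type": "example_type",
    "connection_id": "example_connection_id",
    "connection_name": "example_schema",
    "sync_id": "00000000-0000-0000-0000-000000000000",
})
parsed = parse_log_event(sample)
print(parsed.event, parsed.connection_id, parsed.exception_id)
```

Keeping `data` as a plain dictionary reflects the fact that its contents differ per event type.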

Log event list

Connector log events

Fivetran connectors generate the following events:

| Connector Event Name | Description |
| --- | --- |
| alter_table | Table columns added to, modified in or dropped from destination table |
| change_schema_config_via_sync | Schema configuration updated during a sync |
| connection_failure | Failed to establish connection with source system |
| connection_successful | Successfully established connection with source system |
| copy_rows | Data copied to staging table |
| create_schema | Schema created in destination |
| create_table | Table created in destination |
| delete_rows | Stale rows deleted from main table |
| diagnostic_access_ended | Data access was removed because the related Zendesk ticket was resolved or deleted |
| diagnostic_access_expired | Data access expired |
| diagnostic_access_granted | Data accessed by Fivetran support for diagnostic purposes |
| drop_table | Table dropped from destination |
| error | Error during data sync |
| extract_summary | Summary of data extracted, including the number of API requests for API-based connectors and SQL queries for database connectors |
| forced_resync_connector | Forced re-sync of a connector |
| forced_resync_table | Forced re-sync of a table |
| import_progress | Rough estimate of import progress |
| info | Information during data sync |
| insert_rows | Updated rows inserted in main table |
| json_value_too_long | A JSON value was too long for your destination and had to be truncated |
| processed_records | Number of records read from source system |
| read_end | Data reading ended |
| read_start | Data reading started |
| records_modified | Number of records upserted, updated, or deleted in table within single operation during a sync |
| schema_migration_end | Schema migration ended |
| schema_migration_start | Schema migration started |
| sync_end | Data sync completed |
| sync_start | Connection started syncing data |
| sync_stats | Current sync metadata. This event is only displayed for a successful sync for the following connectors: |
| update_rows | Existing rows in main table updated with new values |
| update_state | Connector-specific data you provided |
| warning | Warning during data sync |
| write_to_table_end | Finished writing records to destination table |
| write_to_table_start | Started writing records to destination table |

Dashboard activity log events

Dashboard activities generate the following events:

REST API call log events

REST API calls generate the following specific events:

Audit Trail log events

Important user actions and the resulting data modifications generate Audit Trail events.

The three main benefits of the Audit Trail logs are as follows:

- Enhanced transparency, accountability, and compliance - they allow users to track important actions and changes, providing a comprehensive history of interactions and data modifications.
- Bolstered security and fostered trust - they help identify unauthorized access or suspicious activities, ensuring data integrity and demonstrating regulatory compliance.
- Streamlined troubleshooting and improved operational efficiency - they empower organizations to conduct thorough investigations and enhance overall operational effectiveness.

The following table lists all Audit Trail log events along with all of their use cases. Note that some events have multiple use cases, in which case the data object contains a use case-specific set of fields. These use cases are listed in the table under the parent log event. For example, Edit Authentication, Generate SCIM Token, and Enable or Disable SCIM are use cases of the edit_account event.

Transformation dbt jobs, new Quickstart Data Models and External Orchestration jobs (dbt Cloud and Coalesce) generate the following events:

This section describes the life cycle of a connection. It lists the corresponding log event generated at each stage of the connection, or connection-related dashboard activities. This will help you recognize the events in the logs and understand how their ordering relates to the operations of the connection.

The connection-related log events are included in the logs captured by the Fivetran Platform Connector and external logging services.

The connection life cycle stages are listed in chronological order where possible.

1. Connection creation initialized

When you create a connection, Fivetran writes its ID in Fivetran's database and assigns the connection status “New.”

Fivetran writes encrypted credentials in its database.

If you create a connection in your Fivetran dashboard, you need to specify the required properties and authorization credentials.

If you create a connection using the Create a Connection API endpoint, you need to specify the required properties. However, you can omit the authorization credentials and authorize the connection later using the Connect Card.

For this stage, Fivetran generates the following log events:

| Event name | Description |
| --- | --- |
| create_connector | New connection is created |
| CREATE_CONNECTION | New connection is created |

2. Connection setup tests run

During these tests, Fivetran verifies that credentials such as authentication details, paths, and IDs are correct and valid, and resources are available and accessible.

When you click Save & Test while creating a connection in your Fivetran dashboard, Fivetran runs the setup tests. You can also run the setup tests by using the Run Connection Setup Tests endpoint. If the setup tests have succeeded, the connection has been successfully created.

For this stage, Fivetran generates the following log events:

3. Connection successfully created

After the setup tests have succeeded, Fivetran records the Connection Created user action in the User Actions Log. At this stage, the connection is paused. It does not extract, process, or load any data from the source to the destination.

After the connection has been successfully created, you can trigger the historical sync.

For this stage, Fivetran generates the following log events:

| Event name | Description |
| --- | --- |
| connection_successful | Successfully established connection with source system |
| connection_failure | Failed to establish connection with source system |

4. Connection schema changed

Connection schema changes include changing the connection schema, tables, and table columns.

You change your connection's schema in the following cases:

You can change your schema in the following ways:

- In your Fivetran dashboard
- With the Fivetran REST API using any of the following endpoints:

For this stage, Fivetran generates the following log events:

| Event name | Description |
| --- | --- |
| change_schema_config | Schema configuration updated |
| EDIT_CONNECTION | Schema configuration updated |

For an un-paused connection, changing the schema will trigger a sync to run. If a sync is already running, Fivetran will cancel the running sync and immediately initiate a new one with the new schema configuration.

5. Sync triggered

You need to run the historical sync for the connection to start working as intended. The first historical sync that Fivetran does for a connection is called the initial sync. During the historical sync, we extract and process all the historical data from the selected tables in the source. Periodically, we will load data into the destination.

After a successful historical sync, the connection runs in the incremental sync mode. In this mode, whenever possible, only data that has been modified or added - incremental changes - is extracted, processed, and loaded on schedule. We will reimport tables where it is not possible to only fetch incremental changes. We use cursors to record the history of the syncs.

The connection sync frequency that you set in your Fivetran dashboard or by using the Update a Connection endpoint defines how often the incremental sync is run.

Incremental sync runs on schedule at a set sync frequency only when the connection's sync scheduling type is set to auto in our REST API. Setting the scheduling type to manual effectively disables the schedule. You can trigger a manual sync to sync the connection in this case.

For this stage, Fivetran generates the following log events:

| Event name | Description | Step |
| --- | --- | --- |
| alter_table | Table columns added to, modified in or dropped from destination table | Load |
| change_schema_config_via_sync | Schema configuration updated during a sync. Updates are done when a new table was created during the sync and the user selected to automatically include new tables in the schema. | Process |
| copy_rows | Data copied to staging table | Load |
| create_schema | Schema created in destination | Load |
| create_table | Table created in destination | Load |
| delete_rows | Stale rows deleted from main table | Load |
| drop_table | Table dropped from destination | Load |
| insert_rows | Updated rows inserted in main table | Load |
| json_value_too_long | A JSON value was too long for your destination and had to be truncated | Process |
| read_end | Data reading ended | Extract |
| read_start | Data reading started | Extract |
| records_modified | Number of records modified during sync | Load |
| schema_migration_end | Schema migration ended | Load |
| schema_migration_start | Schema migration started | Load |
| sync_end | Data sync completed. status field values: SUCCESSFUL, FAILURE, FAILURE_WITH_TASK, and RESCHEDULED | Load |
| sync_start | Connection started syncing data | Extract |
| update_rows | Existing rows in main table updated with new values | Load |
| write_to_table_end | Finished writing records to destination table | Load |
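When reading raw logs, it can help to tag each sync event with the step listed in the table above. The following Python sketch transcribes the event-to-step mapping from that table; the names `EVENT_STEP` and `count_by_step` are hypothetical, and labeling unlisted events as "Unknown" is a choice made for this example.

```python
from collections import Counter

# Event name -> pipeline step, transcribed from the sync event table.
EVENT_STEP = {
    "alter_table": "Load",
    "change_schema_config_via_sync": "Process",
    "copy_rows": "Load",
    "create_schema": "Load",
    "create_table": "Load",
    "delete_rows": "Load",
    "drop_table": "Load",
    "insert_rows": "Load",
    "json_value_too_long": "Process",
    "read_end": "Extract",
    "read_start": "Extract",
    "records_modified": "Load",
    "schema_migration_end": "Load",
    "schema_migration_start": "Load",
    "sync_end": "Load",
    "sync_start": "Extract",
    "update_rows": "Load",
    "write_to_table_end": "Load",
}


def count_by_step(event_names):
    """Count events per step; events not in the table count as 'Unknown'."""
    counts = Counter(EVENT_STEP.get(name, "Unknown") for name in event_names)
    return dict(counts)


# Example with a short, made-up sequence of event names.
print(count_by_step(["sync_start", "read_start", "copy_rows", "info"]))
```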

6. Connection paused/resumed

When you have just created a connection, it is paused, which means it does not extract, process, or load data. After you successfully run the setup tests, the connection becomes enabled/resumed. After the successful initial sync, it starts working in incremental sync mode.

You can pause and resume the connection either in your Fivetran dashboard or by using various Connector endpoints in our API.

For this stage, Fivetran generates the following log events:

| Event name | Description |
| --- | --- |
| pause_connector | The connection was paused |
| resume_connector | The connection was resumed |
| EDIT_CONNECTION | The connection was paused or resumed |

Resuming a connection will trigger either the initial sync or an incremental sync, depending on the stage the connection was at when it was paused.

7. Re-sync triggered

In some cases you may need to re-run a historical sync to fix a data integrity error. This is called a re-sync. We sync all historical data in the tables and their columns in the source as selected in the connection configuration.

You can trigger a re-sync from your Fivetran dashboard. You can also trigger a re-sync by using the Update a Connection endpoint.

For connections that support table re-sync, you can trigger it either in the dashboard or by using the Re-sync connection table data endpoint.

For this stage, Fivetran generates the following log events:

| Event name | Description |
| --- | --- |
| resync_connector | Connection's re-sync is triggered |
| resync_table | Connection's table re-sync is triggered |
| sync_connection | Connection's re-sync triggered |

8. Manual sync triggered

You can trigger an incremental sync manually without waiting for the scheduled incremental sync.

You can do this either by clicking Sync Now in your Fivetran dashboard or by using the Sync Connector Data endpoint.

For this stage, Fivetran generates the following log events:

| Event name | Description |
| --- | --- |
| force_update_connector | Trigger manual update for connection |
| sync_connection | Connection sync triggered |

9. Sync ended

A sync can end in one of the following states:

- Successful - the sync was completed without issue and data in the destination is up to date.
- Failure - the sync failed due to an unknown issue.
- Failure with Error - the sync failed due to a known issue that requires the user to take action to fix it. An Error is generated and displayed on the Alerts page in the dashboard.
- Rescheduled - the sync was unable to complete at this time and will automatically resume syncing when it can complete. This is most commonly caused by hitting API quotas.
- Canceled - the sync was canceled by the user.

When the sync ends, Fivetran generates the following log events:

| Event name | Explanation |
| --- | --- |
| sync_end | Final status of sync. |
| sync_stats | Current sync metadata. This event is only displayed for a successful sync for the following connectors: |

If you set the sync scheduling for your connection to manual, you need to manually trigger your syncs after you make this change. If a manually-triggered sync was rescheduled

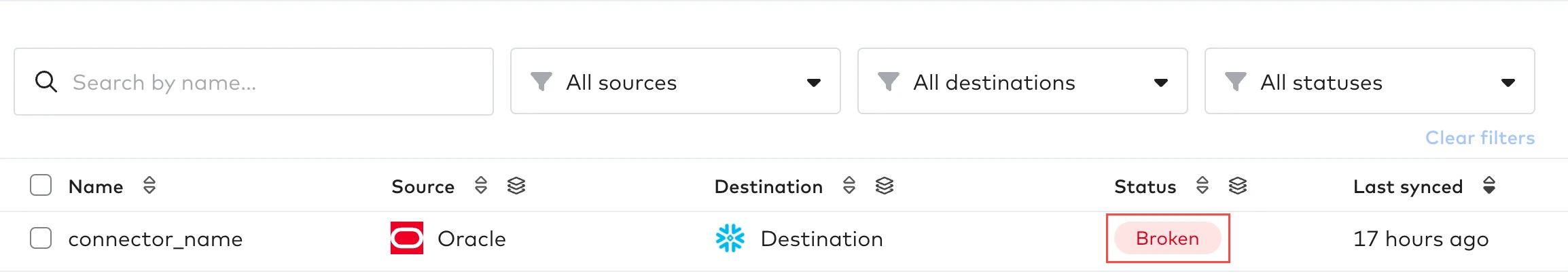

10. Connection broken

This is an abnormal state for a connection. It commonly happens due to transient networking or server errors and most often resolves itself with no action on your part.

A connection is considered to be broken when during its sync it fails to either extract, process, or load data.

In the Connection List, a broken connection has the red Broken label.

In the Connection dashboard, a broken connection also has the red Broken label.

If we know the breaking issue, we create a corresponding Error and notify you by email with instructions on how to resolve the issue. The Error is displayed on the Alerts page in your Fivetran dashboard. You need to take the actions listed in the Error message to fix the connection. We resend the Error email every seven hours until the Error is resolved.

If the root cause of a connection sync failure is unclear, we display an Unknown Error in your dashboard. If the connection remains in this state for 48 hours, we automatically escalate the issue to our support and engineering teams.

For this stage, Fivetran generates the following log events:

| Event name | Description |
| --- | --- |
| sync_end | The value of the status field is either FAILURE or FAILURE_WITH_TASK |
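A log consumer could flag broken-connection syncs by checking sync_end events for these two status values. The Python sketch below is illustrative only: `is_failed_sync_end` is a hypothetical helper, and it assumes the status field sits inside the event's data object, which this page does not state explicitly.

```python
# Status values that this stage associates with a broken connection.
FAILED_STATUSES = {"FAILURE", "FAILURE_WITH_TASK"}


def is_failed_sync_end(event: dict) -> bool:
    """Return True for a sync_end event whose status marks a failed sync.

    Assumption: the status is stored under event["data"]["status"].
    """
    if event.get("event") != "sync_end":
        return False
    status = (event.get("data") or {}).get("status")
    return status in FAILED_STATUSES


# Made-up events for illustration.
events = [
    {"event": "sync_end", "data": {"status": "SUCCESSFUL"}},
    {"event": "sync_end", "data": {"status": "FAILURE_WITH_TASK"}},
    {"event": "sync_start"},
]
print([is_failed_sync_end(e) for e in events])
```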

11. Connection modified

You can change the connection credentials, incremental sync frequency, delay notification period, and other connector-specific details of a connection. You can modify the connection in your Fivetran dashboard or by using the Update a Connection endpoint.

For this stage, Fivetran generates the following log events:

| Event name | Description |
| --- | --- |
| edit_connector | Connection's credential, sync period, delay notification period, or data type locking state is edited |
| EDIT_CONNECTION | Connection schema, sync frequency, details, delay sensitivity, or status changed |

After you have modified and saved your connection, Fivetran automatically runs the setup tests.

12. Connector deleted

When you delete a connection, we delete all of its data from Fivetran's database. You can delete connections both in your Fivetran dashboard and by using the Delete a Connection endpoint.

For this stage, Fivetran generates the following log events:

| Event name | Description |
| --- | --- |
| delete_connector | Connection is deleted |
| DELETE_CONNECTION | Connection is deleted |
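Because some event names appear in more than one life cycle stage (for example, EDIT_CONNECTION and sync_connection), a reverse lookup from event name to candidate stages can help when reading logs. The Python sketch below transcribes the stage tables for user-triggered stages only; stages 5, 9, and 10 are left out for brevity, and the names `STAGE_EVENTS`, `build_reverse_index`, and `candidate_stages` are hypothetical.

```python
# Stage title -> log events listed for that stage in this section.
STAGE_EVENTS = {
    "1. Connection creation initialized": ["create_connector", "CREATE_CONNECTION"],
    "3. Connection successfully created": ["connection_successful", "connection_failure"],
    "4. Connection schema changed": ["change_schema_config", "EDIT_CONNECTION"],
    "6. Connection paused/resumed": ["pause_connector", "resume_connector", "EDIT_CONNECTION"],
    "7. Re-sync triggered": ["resync_connector", "resync_table", "sync_connection"],
    "8. Manual sync triggered": ["force_update_connector", "sync_connection"],
    "11. Connection modified": ["edit_connector", "EDIT_CONNECTION"],
    "12. Connector deleted": ["delete_connector", "DELETE_CONNECTION"],
}


def build_reverse_index(stage_events):
    """Invert the mapping: event name -> list of stages, in stage order."""
    index = {}
    for stage, names in stage_events.items():
        for name in names:
            index.setdefault(name, []).append(stage)
    return index


EVENT_STAGES = build_reverse_index(STAGE_EVENTS)


def candidate_stages(event_name):
    """Return every stage that lists the event, or an empty list."""
    return EVENT_STAGES.get(event_name, [])


print(candidate_stages("EDIT_CONNECTION"))
print(candidate_stages("sync_connection"))
```

An ambiguous event name then has to be resolved from context, such as neighboring events or the event's data object.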

Log event details

The following sections provide details for log events that have event-specific data.

alter_table

"data" : {

"type" : "ADD_COLUMN" ,

"table" : "competitor_profile_pvo" ,

"properties" : {

"columnName" : "object_version_number" ,

"dataType" : "INTEGER" ,

"byteLength" : null ,

"precision" : null ,

"scale" : null ,

"notNull" : null }

}

| fields | description |
| --- | --- |
| type | Table change type. ADD_COLUMN - Add new column; DROP_COLUMN - Drop column; ALTER_COLUMN - Change column; ADD_PRIMARY_KEY - Add primary key; DROP_PRIMARY_KEY - Drop primary key |
| table | Table name |
| properties | Column properties |
| columnName | Column name |
| dataType | Column data type |
| byteLength | Column value byte length |
| precision | Column value precision |
| scale | Column value scale |
| notNull | Whether column data is NULL |
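As an illustration of how the alter_table data object might be consumed, the Python sketch below turns it into a one-line summary. The change type descriptions are taken from the table above; `describe_alter_table` is a hypothetical helper, and the output format is an arbitrary choice for this example.

```python
# Change type -> description, as listed for the type field.
CHANGE_TYPES = {
    "ADD_COLUMN": "add new column",
    "DROP_COLUMN": "drop column",
    "ALTER_COLUMN": "change column",
    "ADD_PRIMARY_KEY": "add primary key",
    "DROP_PRIMARY_KEY": "drop primary key",
}


def describe_alter_table(data: dict) -> str:
    """Build a short human-readable summary of an alter_table data object."""
    change = CHANGE_TYPES.get(data.get("type"), "unknown change")
    props = data.get("properties") or {}
    parts = [f"{data.get('table')}: {change}"]
    if props.get("columnName"):
        parts.append(f"'{props['columnName']}'")
    if props.get("dataType"):
        parts.append(f"({props['dataType']})")
    return " ".join(parts)


# The sample object from this section.
sample = {
    "type": "ADD_COLUMN",
    "table": "competitor_profile_pvo",
    "properties": {
        "columnName": "object_version_number",
        "dataType": "INTEGER",
        "byteLength": None,
        "precision": None,
        "scale": None,
        "notNull": None,
    },
}
print(describe_alter_table(sample))
# competitor_profile_pvo: add new column 'object_version_number' (INTEGER)
```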

| fields | description |
| --- | --- |
| method | API method |
| uri | Endpoint URI |
| body | Request body. Optional |

change_schema_config

"data" : {

"actor" : "stylist_melodious" ,

"connectionId" : "sql_server_test" ,

"properties" : {

"ENABLED_COLUMNS" : [

{

"schema" : "testSchema" ,

"table" : "testTable2" ,

"columns" : [

"ID"

]

}

] ,

"ENABLED_TABLES" : [

{

"schema" : "testSchema" ,

"tables" : [

"testTable2"

]

}

]

}

}

| fields | description |
| --- | --- |
| actor | Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. |
| connectionId | Connection ID |
| properties | Contains schema change type and the relevant entities |
| DISABLED | Array of names of schemas disabled to sync |
| ENABLED | Array of names of schemas enabled to sync |
| DISABLED_TABLES | Array of names of tables disabled to sync |
| ENABLED_TABLES | Array of names of tables enabled to sync |
| DISABLED_COLUMNS | Array of names of columns disabled to sync |
| ENABLED_COLUMNS | Array of names of columns enabled to sync |
| HASHED_COLUMNS | Array of names of hashed columns |
| UNHASHED_COLUMNS | Array of names of unhashed columns |
| SYNC_MODE_CHANGE_FOR_TABLES | Array of schemas containing tables and columns with updated sync mode |
| tablesSyncModes | Array of tables with updated sync mode within schema |
| schema | Schema name |
| tables | Array of table names |
| table | Table name |
| columns | Array of column names |
| syncMode | Updated sync mode. Possible values: Legacy, History |

change_schema_config_via_api

"data" : {

"actor" : "stylist_melodious" ,

"connectionId" : "purported_substituting" ,

"properties" : {

"DISABLED_TABLES" : [

{

"schema" : "shopify_pbf_schema28" ,

"tables" : [

"price_rule"

]

}

] ,

"ENABLED_TABLES" : [

{

"schema" : "shopify_pbf_schema28" ,

"tables" : [

"order_rule"

]

}

] ,

"includeNewByDefault" : false ,

"ENABLED" : [

"shopify_pbf_schema28"

] ,

"DISABLED" : [

"shopify_pbf_schema30"

] ,

"includeNewColumnsByDefault" : false }

}

| fields | description |
| --- | --- |
| actor | Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. |
| connectionId | Connection ID |
| properties | Contains schema change types and relevant entities |
| DISABLED | Array of names of schemas disabled to sync |
| ENABLED | Array of names of schemas enabled to sync |
| DISABLED_TABLES | Array of names of tables disabled to sync |
| ENABLED_TABLES | Array of names of tables enabled to sync |
| schema | Schema name |
| tables | Array of table names |
| columns | Array of column names |
| includeNewByDefault | If set to true, all new schemas, tables, and columns are enabled to sync. |
| includeNewColumnsByDefault | If set to true, only new columns are enabled to sync. |
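To show how the properties object might be read, the Python sketch below flattens the schema and table arrays of a change_schema_config_via_api event into qualified names. The sample is the data object from this section; `flatten_tables` and `summarize_schema_change` are hypothetical helpers, and the `schema.table` output format is an assumption made for readability.

```python
def flatten_tables(entries):
    """Turn [{"schema": s, "tables": [t, ...]}, ...] into ["s.t", ...]."""
    return [
        f"{entry['schema']}.{table}"
        for entry in (entries or [])
        for table in entry.get("tables", [])
    ]


def summarize_schema_change(data: dict) -> dict:
    """Collect enabled and disabled schemas and tables from a data object."""
    props = data.get("properties") or {}
    return {
        "enabled_schemas": list(props.get("ENABLED", [])),
        "disabled_schemas": list(props.get("DISABLED", [])),
        "enabled_tables": flatten_tables(props.get("ENABLED_TABLES")),
        "disabled_tables": flatten_tables(props.get("DISABLED_TABLES")),
    }


# The sample data object from this section.
sample = {
    "actor": "stylist_melodious",
    "connectionId": "purported_substituting",
    "properties": {
        "DISABLED_TABLES": [{"schema": "shopify_pbf_schema28", "tables": ["price_rule"]}],
        "ENABLED_TABLES": [{"schema": "shopify_pbf_schema28", "tables": ["order_rule"]}],
        "includeNewByDefault": False,
        "ENABLED": ["shopify_pbf_schema28"],
        "DISABLED": ["shopify_pbf_schema30"],
        "includeNewColumnsByDefault": False,
    },
}
print(summarize_schema_change(sample))
```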

change_schema_config_via_sync

"data" : {

"connectionId" : "documentdb" ,

"properties" : {

"ADDITION" : [

{

"schema" : "docdb_1" ,

"tables" : [

"STRING_table"

]

}

] ,

"REMOVAL" : [

{

"schema" : "docdb_2" ,

"tables" : [

"STRING_table"

]

}

]

}

}

| fields | description |
| --- | --- |
| connectionId | Connection ID |
| properties | Contains schema change types and relevant entities |
| ADDITION | Contains schemas and tables enabled to sync |
| REMOVAL | Contains schemas and tables disabled to sync |
| schema | Schema name |
| tables | Array of table names |

connection_failure

"data" : {

"actor" : "stylist_melodious" ,

"id" : "db2ihva_test5" ,

"testName" : "Connecting to SSH tunnel" ,

"message" : "The ssh key might have changed"

}

| fields | description |
| --- | --- |
| actor | Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. |
| id | Connection ID |
| testName | Name of failed test |
| message | Message |

connection_successful

"data" : {

"actor" : "stylist_melodious" ,

"id" : "db2ihva_test5" ,

"testName" : "DB2i DB accessibility test" ,

"message" : ""

}

| fields | description |
| --- | --- |
| actor | Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. |
| id | Connection ID |
| testName | Name of succeeded test |
| message | Message |

copy_rows

"data" : {

"schema" : "company_bronco_shade_staging" ,

"name" : "facebook_ads_ad_set_attribution_2022_11_03_73te45jo6q34mvjfqfbcwjaea" ,

"destinationName" : "ad_set_attribution" ,

"destinationSchema" : "facebook_ads" ,

"copyType" : "WRITE_TRUNCATE"

}

| fields | description |
| --- | --- |
| schema | Schema name |
| name | Table name |
| destinationName | Table name in destination. Optional |
| destinationSchema | Schema name in destination. Optional |
| copyType | Copy type. Optional |

create_connector

"data" : {

"actor" : "stylist_melodious" ,

"id" : "db2ihva_test5" ,

"properties" : {

"host" : "111.111.11.11" ,

"password" : "************" ,

"user" : "dbihvatest" ,

"database" : "dbitest1" ,

"port" : 1111 ,

"tunnelHost" : "11.111.111.111" ,

"tunnelPort" : 11 ,

"tunnelUser" : "hvr" ,

"alwaysEncrypted" : true ,

"agentPublicCert" : "..." ,

"agentUser" : "johndoe" ,

"agentPassword" : "************" ,

"agentHost" : "localhost" ,

"agentPort" : 1112 ,

"publicKey" : "..." ,

"parameters" : null ,

"connectionType" : "SshTunnel" ,

"databaseHost" : "111.111.11.11" ,

"databasePassword" : "dbihvatest" ,

"databaseUser" : "dbihvatest" ,

"logJournal" : "VBVJRN" ,

"logJournalSchema" : "DBETEST1" ,

"agentToken" : null ,

"sshHostFromSbft" : null }

}

| fields | description |
| --- | --- |
| actor | Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. |
| id | Connection ID |
| properties | Connector type-specific properties |

create_table

"data" : {

"schema" : "facebooka_ads" ,

"name" : "account_history" ,

"columns" : {

"schema" : "STRING" ,

"update_id" : "STRING" ,

"_fivetran_synced" : "TIMESTAMP" ,

"rows_updated_or_inserted" : "INTEGER" ,

"update_started" : "TIMESTAMP" ,

"start" : "TIMESTAMP" ,

"progress" : "TIMESTAMP" ,

"id" : "STRING" ,

"message" : "STRING" ,

"done" : "TIMESTAMP" ,

"table" : "STRING" ,

"status" : "STRING"

} ,

"primary_key_clause" : "\"_FIVETRAN_ID\""

}

| fields | description |
| --- | --- |
| schema | Schema name |
| name | Table name |
| columns | Table columns. Contains table column names and their data types |
| primary_key_clause | Column or set of columns forming primary key. Optional |

create_warehouse

"data" : {

"actor" : "stylist_melodious" ,

"id" : "Warehouse" ,

"properties" : {

"projectId" : "werwe-ert-234567" ,

"dataSetLocation" : "US" ,

"bucket" : null ,

"secretKey" : "************" ,

"secretKeyRemoteExecution" : "************"

}

}

| fields | description |
| --- | --- |
| actor | Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. |
| id | Destination ID |
| properties | Destination type-specific properties |

"data" : {

"id" : "rivalry_occupier" ,

"name" : "core-v2-cron_0_8" ,

"startTime" : "2024-12-18T16:57:41.671Z" ,

"transformationType" : "DBT_CORE" ,

"schedule" : { "type" : "cron" , "entries" : [ "*/3 * * * *" ] } ,

"endTime" : "2024-12-18T16:58:43.323Z" ,

"result" : {

"stepResults" : [

{

"step" : {

"name" : "a_step_1_1" ,

"command" : "dbt run --select +modela" ,

"processBuilderCommand" : null } ,

"success" : false ,

"startTime" : "2024-12-18T16:57:51.671Z" ,

"endTime" : "2024-12-18T16:58:38.323Z" ,

"commandResult" : {

"exitCode" : 1 ,

"output" : "16:57:54 Running with dbt=1.7.3\n16:57:55 Registered adapter: bigquery=1.7.2\n16:57:55 Unable to do partial parsing because saved manifest not found. Starting full parse.\n16:57:56 Found 3 models, 1 source, 0 exposures, 0 metrics, 447 macros, 0 groups, 0 semantic models\n16:57:56 \n16:58:37 Concurrency: 7 threads (target='prod')\n16:58:37 \n16:58:37 1 of 1 START sql view model test_schema.modela .................................. [RUN]\n16:58:37 BigQuery adapter: https://console.cloud.google.com/bigquery\n16:58:37 1 of 1 ERROR creating sql view model test_schema.modela ......................... [ERROR in 0.35s]\n16:58:37 \n16:58:37 Finished running 1 view model in 0 hours 0 minutes and 40.91 seconds (40.91s).\n16:58:37 \n16:58:37 Completed with 1 error and 0 warnings:\n16:58:37 \n16:58:37 Database Error in model modela (models/modela.sql)\n Quota exceeded: Your table exceeded quota for imports or query appends per table. For more information, see https://cloud.google.com/bigquery/docs/troubleshoot-quotas\n compiled Code at target/run/test_schema/models/modela.sql\n16:58:37 \n16:58:37 Done. PASS=0 WARN=0 ERROR=1 SKIP=0 TOTAL=1" ,

"error" : ""

} ,

"error" : null ,

"successfulModelRuns" : 0 ,

"failedModelRuns" : 1 ,

"modelResults" : [

{

"name" : "test_schema.modela" ,

"errorCategory" : "UNCATEGORIZED" ,

"errorData" : null ,

"succeeded" : false }

]

}

] ,

"error" : null ,

"description" : "Steps: successful 0, failed 1"

}

} ,

| fields | description |
| --- | --- |
| id | Job ID |
| name | Job name |
| startTime | Job run start time |
| transformationType | Job type (DBT_CORE, QUICKSTART, DBT_CLOUD, or COALESCE) |
| schedule | Job schedule |
| endTime | Job run end time |
| result | Result details |
| stepResults | Step results |
| step | Step details |
| name | Step name |
| command | Step command for DBT_CORE |
| processBuilderCommand | Step command for QUICKSTART |
| success | Boolean specifying whether step was successful |
| startTime | Step run start time |
| endTime | Step run end time |
| commandResult | Command run result details |
| exitCode | Command exit code |
| output | Command output |
| error | Command execution errors |
| error | An exception message that occurred during step execution |
| successfulModelRuns | Number of successful model runs |
| failedModelRuns | Number of failed model runs |
| modelResults | Model run results |
| name | Model name |
| errorCategory | Model error category |
| errorData | Model error data |
| succeeded | Boolean specifying whether model run was successful |
| error | Error message if the job failed outside of step execution |
| description | Step description |

"data" : {

"id" : "rivalry_occupier" ,

"name" : "core-v2-cron_0_8" ,

"startTime" : "2024-12-19T02:28:40.122Z" ,

"transformationType" : "DBT_CORE" ,

"schedule" : { "type" : "cron" , "entries" : [ "*/3 * * * *" ] }

} ,

| fields | description |
| --- | --- |
| id | Job ID |
| name | Job name |
| startTime | Job run start time |
| transformationType | Job type (DBT_CORE, QUICKSTART, DBT_CLOUD, or COALESCE) |
| schedule | Job schedule |

"data" : {

"id" : "rivalry_occupier" ,

"name" : "core-v2-cron_0_8" ,

"startTime" : "2024-12-18T16:42:38.557Z" ,

"transformationType" : "DBT_CORE" ,

"schedule" : { "type" : "cron" , "entries" : [ "*/3 * * * *" ] } ,

"endTime" : "2024-12-18T16:43:41.933Z" ,

"result" : {

"stepResults" : [

{

"step" : {

"name" : "a_step_1_1" ,

"command" : "dbt run --select +modela" ,

"processBuilderCommand" : null } ,

"success" : true ,

"startTime" : "2024-12-18T16:42:48.557Z" ,

"endTime" : "2024-12-18T16:43:36.933Z" ,

"commandResult" : {

"exitCode" : 0 ,

"output" : "16:42:51 Running with dbt=1.7.3\n16:42:52 Registered adapter: bigquery=1.7.2\n16:42:52 Unable to do partial parsing because saved manifest not found. Starting full parse.\n16:42:53 Found 3 models, 1 source, 0 exposures, 0 metrics, 447 macros, 0 groups, 0 semantic models\n16:42:53 \n16:43:35 Concurrency: 7 threads (target='prod')\n16:43:35 \n16:43:35 1 of 1 START sql view model test_schema.modela .................................. [RUN]\n16:43:36 1 of 1 OK created sql view model test_schema.modela ............................. [CREATE VIEW (0 processed) in 0.83s]\n16:43:36 \n16:43:36 Finished running 1 view model in 0 hours 0 minutes and 42.27 seconds (42.27s).\n16:43:36 \n16:43:36 Completed successfully\n16:43:36 \n16:43:36 Done. PASS=1 WARN=0 ERROR=0 SKIP=0 TOTAL=1" ,

"error" : ""

} ,

"error" : null ,

"successfulModelRuns" : 1 ,

"failedModelRuns" : 0 ,

"modelResults" : [

{

"name" : "test_schema.modela" ,

"errorCategory" : null ,

"errorData" : null ,

"succeeded" : true }

]

}

] ,

"error" : null ,

"description" : "Steps: successful 1, failed 0"

}

} ,

| fields | description |
| --- | --- |
| id | Job ID |
| name | Job name |
| startTime | Job run start time |
| transformationType | Job type (DBT_CORE, QUICKSTART, DBT_CLOUD, or COALESCE) |
| schedule | Job schedule |
| endTime | Job run end time |
| result | Result details |
| stepResults | Step results |
| step | Step details |
| name | Step name |
| command | Step command for DBT_CORE |
| processBuilderCommand | Step command for QUICKSTART |
| success | Boolean specifying whether step was successful |
| startTime | Step run start time |
| endTime | Step run end time |
| commandResult | Command run result details |
| exitCode | Command exit code |
| output | Command output |
| error | Command execution errors |
| error | An exception message that occurred during step execution |
| successfulModelRuns | Number of successful model runs |
| failedModelRuns | Number of failed model runs |
| modelResults | Model run results |
| name | Model name |
| errorCategory | Model error category |
| errorData | Model error data |
| succeeded | Boolean specifying whether model run was successful |
| error | Error message if the job failed outside of step execution |
| description | Step description |
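The nesting of result, stepResults, and modelResults in the transformation samples can be walked to list the models that failed in a run. The Python sketch below is one possible approach: `failed_models` is a hypothetical helper, and the input is a trimmed copy of the failed-run sample shown earlier, with the long command output omitted.

```python
def failed_models(data: dict) -> list:
    """Return (step name, model name, error category) for each failed model."""
    failures = []
    result = data.get("result") or {}
    for step_result in result.get("stepResults") or []:
        step_name = (step_result.get("step") or {}).get("name")
        for model in step_result.get("modelResults") or []:
            if not model.get("succeeded"):
                failures.append(
                    (step_name, model.get("name"), model.get("errorCategory"))
                )
    return failures


# Trimmed copy of the failed-run sample (command output omitted).
sample = {
    "id": "rivalry_occupier",
    "name": "core-v2-cron_0_8",
    "transformationType": "DBT_CORE",
    "result": {
        "stepResults": [
            {
                "step": {"name": "a_step_1_1", "command": "dbt run --select +modela"},
                "success": False,
                "successfulModelRuns": 0,
                "failedModelRuns": 1,
                "modelResults": [
                    {
                        "name": "test_schema.modela",
                        "errorCategory": "UNCATEGORIZED",
                        "errorData": None,
                        "succeeded": False,
                    }
                ],
            }
        ],
        "error": None,
        "description": "Steps: successful 0, failed 1",
    },
}
print(failed_models(sample))
```

For a run in which every model succeeded, or for an event without a result object, the function returns an empty list.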

delete_connector

"data" : {

"actor" : "stylist_melodious" ,

"id" : "hva_main_metrics_test_qe_benchmark"

}

| fields | description |
| --- | --- |
| actor | Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. |
| id | Connection ID |

delete_rows

"data" : {

"schema" : "company_bronco_shade_staging" ,

"name" : "facebook_ads_ad_set_attribution_2022_11_03_73er44io6q0m1dfgjfbcghjea" ,

"deleteCondition" : "`#existing`.`ad_set_id` = `#scratch`.`ad_set_id` AND `#existing`.`ad_set_updated_time` = `#scratch`.`ad_set_updated_time`"

}

| fields | description |
| --- | --- |
| schema | Schema name |
| name | Table name |
| deleteCondition | Delete condition |

delete_warehouse

"data" : {

"actor" : "stylist_melodious" ,

"id" : "Warehouse"

}

| fields | description |
| --- | --- |
| actor | Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. |
| id | Destination ID |

diagnostic_access_approved

"data" : {

"message" : "Data access granted for 21 days." ,

"ticketId" : "123456" ,

"destinationName" : "destination" ,

"connectionName" : "facebook_ads" ,

"actor" : "actor"

}

| fields | description |
| --- | --- |
| message | Diagnostic data access message |
| ticketId | Zendesk support ticket number |
| destinationName | Destination name |
| connectionName | Connection name |
| actor | Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. |

diagnostic_access_granted

"data" : {

"message" : "Data accessed by Fivetran support for diagnostic purposes" ,

"connectionName" : "connector" ,

"destinationName" : "destination" ,

"requester" : "requester-name" ,

"supportTicket" : "1234"

}

fields description message Diagnostic data access message connectionName Connection name destinationName Destination name requester Requester name as specified in Zendesk supportTicket Zendesk support ticket number

drop_table

"data" : {

"schema" : "company_bronco_shade_staging" ,

"name" : "facebook_ads_company_audit_2022_11_03_puqdfgi35r6e1odfgy36rdfgv"

}

fields description schema Schema name name Table name reason Reason why table was dropped. Optional

edit_connector

"data" : {

"actor" : "stylist_melodious" ,

"editType" : "CREDENTIALS" ,

"id" : "db2ihva_test5" ,

"properties" : {

"host" : "111.111.11.11" ,

"password" : "************" ,

"user" : "dbihvatest" ,

"database" : "dbitest1" ,

"port" : 1111 ,

"tunnelHost" : "11.111.111.111" ,

"tunnelPort" : 11 ,

"tunnelUser" : "hvr" ,

"alwaysEncrypted" : true ,

"agentPublicCert" : "..." ,

"agentUser" : "johndoe" ,

"agentPassword" : "************" ,

"agentHost" : "localhost" ,

"agentPort" : 1111 ,

"publicKey" : "..." ,

"parameters" : null ,

"connectionType" : "SshTunnel" ,

"databaseHost" : "111.111.11.12" ,

"databasePassword" : "dbihvatest" ,

"databaseUser" : "dbihvatest" ,

"logJournal" : "SDSJRN" ,

"logJournalSchema" : "SFGTEST1" ,

"agentToken" : null ,

"sshHostFromSbft" : null } ,

"oldProperties" : {

"host" : "111.111.11.11" ,

"password" : "************" ,

"user" : "dbihvatest" ,

"database" : "dbitest1" ,

"port" : 1111 ,

"tunnelHost" : "11.111.111.111" ,

"tunnelPort" : 12 ,

"tunnelUser" : "hvr" ,

"alwaysEncrypted" : true ,

"agentPublicCert" : "..." ,

"agentUser" : "johndoe" ,

"agentPassword" : "************" ,

"agentHost" : "localhost" ,

"agentPort" : 1111 ,

"publicKey" : "..." ,

"parameters" : null ,

"connectionType" : "SshTunnel" ,

"databaseHost" : "111.111.11.12" ,

"databasePassword" : "dbihvatest" ,

"databaseUser" : "dbihvatest" ,

"logJournal" : "SDSJRN" ,

"logJournalSchema" : "SFGTEST1" ,

"agentToken" : null ,

"sshHostFromSbft" : null }

}

fields description actor Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. editType Edit type. CREDENTIALS - Change of credentials; UPDATE_SCHEDULE_TYPE - Change of schedule type; UPDATE_SCHEMA_STATUS - Change of schema status; DELAY_NOTIFICATION_PERIOD - Change of delay notification period; DELAY_NOTIFICATION - Change of delay notification; UPDATE_DATA_SENSITIVITY - Change of data sensitivity; UPDATE_SETUP_STATE - Change of connection or setup status; UPDATE_TYPE_LOCKING_STATE - Change of data type locking state. id Connection ID properties Connector type-specific properties oldProperties Changed connection type-specific properties
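
One way to see what an edit_connector event changed is to compare properties with oldProperties key by key. The following is a minimal sketch, not a Fivetran utility: changed_properties is a hypothetical helper, and it assumes the data object has already been parsed into a Python dict. The sample is a trimmed copy of the event above.

```python
def changed_properties(data):
    """Return {key: (old_value, new_value)} for every key whose value differs."""
    old = data.get("oldProperties") or {}
    new = data.get("properties") or {}
    changes = {}
    for key in sorted(set(old) | set(new)):
        if old.get(key) != new.get(key):
            changes[key] = (old.get(key), new.get(key))
    return changes


# Trimmed copy of the sample event; only a few properties are kept.
sample = {
    "actor": "stylist_melodious",
    "editType": "CREDENTIALS",
    "id": "db2ihva_test5",
    "properties": {
        "host": "111.111.11.11",
        "password": "************",
        "tunnelPort": 11,
    },
    "oldProperties": {
        "host": "111.111.11.11",
        "password": "************",
        "tunnelPort": 12,
    },
}

print(changed_properties(sample))  # {'tunnelPort': (12, 11)}
```

In the sample, secret values are masked identically in both objects, so a comparison like this may not reveal a changed password.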

extract_summary

The objects field of the extract_summary log event is not available for all connectors. If you need a specific connector to support this log event, submit a feature request.

"data" : {

"status" : "SUCCESS" ,

"total_queries" : 984 ,

"total_rows" : 3746 ,

"total_size" : 8098757 ,

"rounded_total_size" : "7 MB" ,

"objects" : [

{

"name" : "https://aggregated_endpoint_a" ,

"queries" : 562

} ,

{

"name" : "https://aggregated_endpoint_b" ,

"queries" : 78

} ,

{

"name" : "https://aggregated_endpoint_c" ,

"queries" : 344

}

]

}

fields description status The overall status of the query operation. Possible values: SUCCESS, FAIL total_queries Total count of API calls total_rows Total number of rows extracted total_size Total size of the data extracted in bytes rounded_total_size A human-readable format of the total size. The value is rounded down to the nearest unit. For example, if the size is 3430 KB, the value is displayed as 3 MB. The same logic applies when rounding to KB or GB, whichever is the nearest unit objects An array of objects. Each object contains an aggregation template designed to consolidate similar API calls into a single count name The URL or identifier of the endpoint queries The number of API calls to a specific endpoint
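
The rounding rule for rounded_total_size can be sketched as follows. This is an illustration under assumptions: rounded_size is a hypothetical helper, and the 1024-based units are inferred from the sample above (8098757 bytes shown as 7 MB), not stated in this documentation.

```python
UNITS = ["B", "KB", "MB", "GB", "TB"]


def rounded_size(total_bytes):
    """Round a byte count down to the largest whole unit (assumed 1024-based)."""
    value = total_bytes
    unit = 0
    # Integer division drops the remainder, which rounds down at each step.
    while value >= 1024 and unit < len(UNITS) - 1:
        value //= 1024
        unit += 1
    return f"{value} {UNITS[unit]}"


print(rounded_size(8098757))      # 7 MB
print(rounded_size(3430 * 1024))  # 3 MB
```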

force_update_connector

"data" : {

"actor" : "stylist_melodious" ,

"id" : "db2ihva_test5"

}

fields description actor Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. id Connection ID

forced_resync_connector

"data" : {

"reason" : "Credit Card Payment resync" ,

"cause" : "MIGRATION"

}

fields description reason Re-sync reason cause Re-sync cause

forced_resync_table

"data" : {

"schema" : "hubspot_test" ,

"table" : "ticket_property_history" ,

"reason" : "Ticket's cursor is older than a day, triggering re-sync for TICKET and its child tables." ,

"cause" : "STRATEGY"

}

fields description schema Schema name table Table name reason Resync reason cause Resync cause

import_progress

"data" : {

"tableProgress" : {

"dbo.orders" : "NOT_STARTED" ,

"dbo.history" : "NOT_STARTED" ,

"dbo.district" : "COMPLETE" ,

"dbo.new_order" : "NOT_STARTED"

}

}

fields description tableProgress Table progress as a list of tables with their import status. Valid values for status: COMPLETE - table import is complete; NOT_STARTED - table import did not start
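
To turn an import_progress event into a progress figure, the statuses in tableProgress can be counted. The sketch below is illustrative: import_summary is a hypothetical helper that assumes data is already a Python dict and treats only COMPLETE as finished.

```python
from collections import Counter


def import_summary(data):
    """Return (status counts, percent of tables with status COMPLETE)."""
    progress = data.get("tableProgress", {})
    counts = Counter(progress.values())
    total = len(progress)
    percent = 100.0 * counts.get("COMPLETE", 0) / total if total else 0.0
    return counts, percent


# Copy of the sample event above.
sample = {
    "tableProgress": {
        "dbo.orders": "NOT_STARTED",
        "dbo.history": "NOT_STARTED",
        "dbo.district": "COMPLETE",
        "dbo.new_order": "NOT_STARTED",
    }
}

counts, percent = import_summary(sample)
print(dict(counts), percent)
```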

info

"data" : {

"type" : "extraction_start" ,

"message" : "{currentTable: DBIHVA.JOHNDOE_TEST_DATE, imported: 2, selected: 3}"

}

fields description type Information message type message Information message

insert_rows

"data" : {

"schema" : "quickbooks" ,

"name" : "journal_entry_line"

}

fields description schema Schema name name Name of the table that rows were inserted into

pause_connector

"data" : {

"actor" : "stylist_melodious" ,

"id" : "db2ihva_test5"

}

"data" : {

"actor" : "_fivetran_system" ,

"id" : "db2ihva_test5" ,

"reason" : "Connector is paused because trial period has ended"

}

fields description actor Actor's internal unique ID or _fivetran_system if the connection has been paused automatically. For more details, see sample queries that join the LOG and USER tables. id Connection ID reason If a connection has been paused automatically, this field contains a short description of why this happened
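
The two pause_connector samples differ only in the actor value and the presence of reason. A minimal sketch of telling them apart, assuming data is a parsed Python dict (describe_pause is a hypothetical helper name):

```python
def describe_pause(data):
    """Describe a pause_connector event as automatic or user-initiated."""
    if data.get("actor") == "_fivetran_system":
        # reason is only expected on automatic pauses, so fall back gracefully.
        reason = data.get("reason", "no reason given")
        return f"{data['id']} paused automatically: {reason}"
    return f"{data['id']} paused by user {data['actor']}"


manual = {"actor": "stylist_melodious", "id": "db2ihva_test5"}
automatic = {
    "actor": "_fivetran_system",
    "id": "db2ihva_test5",
    "reason": "Connector is paused because trial period has ended",
}

print(describe_pause(manual))
print(describe_pause(automatic))
```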

processed_records

"data" : {

"table" : "ITEM_PRICES" ,

"recordsCount" : 24

}

fields description table Table name recordsCount Number of processed records

read_end

"data" : {

"source" : "be822a.csv.gz"

}

fields description source The connector-specific source of read data

read_start

"data" : {

"source" : "incremental update"

}

fields description source The connector-specific source of read data

records_modified

"data" : {

"schema" : "facebook_ads" ,

"table" : "company_audit" ,

"operationType" : "REPLACED_OR_INSERTED" ,

"count" : 12

}

fields description schema Schema name table Table name operationType Operation type count Number of operations
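
A sync can emit many records_modified events, and summing count per table and operation type gives a compact view. The sketch below is an assumption-laden illustration: totals_by_table is a hypothetical helper, and the second event in the list is invented for the example rather than taken from real logs.

```python
from collections import defaultdict


def totals_by_table(events):
    """Sum count per (schema, table, operationType) across data objects."""
    totals = defaultdict(int)
    for data in events:
        key = (data["schema"], data["table"], data["operationType"])
        totals[key] += data["count"]
    return dict(totals)


events = [
    # Sample event from above.
    {"schema": "facebook_ads", "table": "company_audit",
     "operationType": "REPLACED_OR_INSERTED", "count": 12},
    # Hypothetical second event for the same table.
    {"schema": "facebook_ads", "table": "company_audit",
     "operationType": "REPLACED_OR_INSERTED", "count": 8},
]

print(totals_by_table(events))
```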

resume_connector

"data" : {

"actor" : "stylist_melodious" ,

"id" : "db2ihva_test5"

}

fields description actor Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. id Connection ID

resync_connector

"data" : {

"actor" : "stylist_melodious" ,

"id" : "bench_10g"

}

fields description actor Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. id Connection ID

resync_table

"data" : {

"actor" : "stylist_melodious" ,

"id" : "ash_hopper_staging" ,

"schema" : "public" ,

"table" : "big"

}

fields description actor Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. id Connection ID schema Schema name table Table name

schema_migration_end

"data" : {

"migrationStatus" : "SUCCESS"

}

fields description migrationStatus Migration status.

sync_end

"data" : {

"status" : "SUCCESSFUL"

}

fields description status Sync status. Valid values: "SUCCESSFUL", "RESCHEDULED", "FAILURE", "FAILURE_WITH_TASK" reason If status is FAILURE, this is the description of the reason why the sync failed. If status is FAILURE_WITH_TASK, this is the description of the Error. If status is RESCHEDULED, this is the description of the reason why the sync was rescheduled. taskType If status is FAILURE_WITH_TASK or RESCHEDULED, this field displays the type of the Error that caused the failure or rescheduling, respectively (for example, reconnect, update_service_account) rescheduledAt If status is RESCHEDULED, this field displays the scheduled time to resume the sync. The scheduled time depends on the reason the sync was rescheduled
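
Which optional fields matter in a sync_end event depends on status. The following sketch shows one way to branch on it; summarize_sync_end is a hypothetical helper, data is assumed to be a parsed dict, and the non-successful inputs in the usage lines are invented for illustration.

```python
def summarize_sync_end(data):
    """Build a one-line summary from the fields relevant to each status."""
    status = data.get("status")
    reason = data.get("reason", "no reason given")
    if status == "SUCCESSFUL":
        return "sync succeeded"
    if status == "FAILURE":
        return f"sync failed: {reason}"
    if status == "FAILURE_WITH_TASK":
        return f"sync failed with task {data.get('taskType')}: {reason}"
    if status == "RESCHEDULED":
        return (f"sync rescheduled to {data.get('rescheduledAt')} "
                f"(task {data.get('taskType')}): {reason}")
    return f"unrecognized status: {status}"


print(summarize_sync_end({"status": "SUCCESSFUL"}))
# Hypothetical failure event; the reason text is made up.
print(summarize_sync_end({"status": "FAILURE_WITH_TASK",
                          "taskType": "reconnect",
                          "reason": "credentials expired"}))
```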

sync_stats

The sync_stats event is only generated for a successful sync for the following connectors:

"data" : {

"extract_time_s" : 63 ,

"extract_volume_mb" : 0 ,

"process_time_s" : 21 ,

"process_volume_mb" : 0 ,

"load_time_s" : 34 ,

"load_volume_mb" : 0 ,

"total_time_s" : 129

}

fields description extract_time_s Extract time in seconds extract_volume_mb Extracted data volume in MB process_time_s Process time in seconds process_volume_mb Processed data volume in MB load_time_s Load time in seconds load_volume_mb Loaded data volume in MB total_time_s Total time in seconds
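
A sync_stats event can be broken down by phase. Note that in the sample above the three phase times (63 + 21 + 34 = 118) do not add up to total_time_s (129); this documentation does not say what the remainder covers, so the sketch below only reports it. phase_breakdown is a hypothetical helper that assumes data is a parsed dict.

```python
def phase_breakdown(data):
    """Return per-phase seconds and the part of total_time_s not attributed to a phase."""
    phases = {name: data[f"{name}_time_s"] for name in ("extract", "process", "load")}
    unattributed = data["total_time_s"] - sum(phases.values())
    return phases, unattributed


# Copy of the sample event above.
sample = {
    "extract_time_s": 63, "extract_volume_mb": 0,
    "process_time_s": 21, "process_volume_mb": 0,
    "load_time_s": 34, "load_volume_mb": 0,
    "total_time_s": 129,
}

phases, unattributed = phase_breakdown(sample)
print(phases, unattributed)
```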

test_connector_connection

"data" : {

"actor" : "stylist_melodious" ,

"id" : "db2ihva_test5" ,

"testCount" : 6

}

fields description actor Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. id Connection ID testCount Number of tests

update_rows

"data" : {

"schema" : "hubspot_johndoe" ,

"name" : "company"

}

fields description schema Schema name name Table name

update_state

fields description state Connector-specific data you provide to us as JSON. Supports nested objects

update_warehouse

"data" : {

"actor" : "stylist_melodious" ,

"id" : "redshift_tst_1" ,

"properties" : {

"region" : "us-east-2"

} ,

"oldProperties" : {

"region" : "us-east-1"

}

}

fields description actor Actor's internal unique ID. For more details, see sample queries that join the LOG and USER tables. id Destination ID properties Destination type-specific properties oldProperties Changed destination type-specific properties

warning

Example 1

"data" : {

"type" : "skip_table" ,

"table" : "api_access_requests" ,

"reason" : "No changed data in named range"

}

Example 2

"data" : {

"type" : "retry_api_call" ,

"message" : "Retrying after 60 seconds. Error : ErrorResponse{msg='Exceeded rate limit for endpoint: /api/export/data.csv, project: 11111 ', code='RateLimitExceeded', params='{}'}"

}

fields description type Warning type table Table name reason Warning reason message Warning message

write_to_table_start

"data" : {

"table" : "company_audit"

}

fields description table Table name

write_to_table_end

"data" : {

"table" : "company_audit"

}

fields description table Table name

create_connection

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "API" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "connection_id" ,

"action" : "CREATE" ,

"timestamp" : "2025-07-07T16:34:20.536752Z" ,

"oldValues" : {

"credentials" : null ,

"schema" : null ,

"service" : null } ,

"newValues" : {

"credentials" : {

"key" : "value" ,

"key2" : "value2"

} ,

"schema" : "schema" ,

"service" : "service"

}

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (CREATE) timestamp Event timestamp oldValues Previous connection details oldValues.credentials Previous credentials (null) oldValues.schema Previous schema (null) oldValues.service Previous service (null) newValues New connection details newValues.credentials New credentials (JSON object, connector-specific key-value pairs) newValues.schema New schema newValues.service New service

create_destination

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "API" ,

"primaryResourceType" : "DESTINATION" ,

"primaryResourceId" : "destination_id" ,

"action" : "CREATE" ,

"timestamp" : "2025-07-07T16:33:16.376908Z" ,

"oldValues" : {

"name" : null ,

"region" : null } ,

"newValues" : {

"name" : "name" ,

"region" : "region"

}

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (DESTINATION) primaryResourceId Destination ID action Action performed (CREATE) timestamp Event timestamp oldValues Previous destination details oldValues.name Previous destination name (null) oldValues.region Previous destination region (null) newValues New destination details newValues.name Destination name newValues.region Destination region

create_team

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "TEAM" ,

"primaryResourceId" : "team_id" ,

"action" : "CREATE" ,

"timestamp" : "2025-07-07T16:36:14.158294Z" ,

"newValues" : {

"account_role" : "role_name" ,

"team_name" : "team_name"

}

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (TEAM) primaryResourceId Team ID action Action performed (CREATE) timestamp Event timestamp newValues New team details newValues.account_role Team account role newValues.team_name Team name

create_user

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "API" ,

"primaryResourceType" : "ACCOUNT" ,

"primaryResourceId" : "account_id" ,

"secondaryResourceType" : "USER" ,

"secondaryResourceId" : "user_id" ,

"action" : "CREATE" ,

"timestamp" : "2025-07-07T16:27:03.472937Z" ,

"newValues" : {

"user_first_name" : "***" ,

"user_email" : "***" ,

"user_last_name" : "***" ,

"account_role" : "role_name"

}

}

Field Description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (ACCOUNT) primaryResourceId Account ID secondaryResourceType Secondary resource type (USER) secondaryResourceId User ID action Action performed (CREATE) timestamp Event timestamp newValues New user details newValues.user_first_name User's first name newValues.user_email User's email address newValues.user_last_name User's last name newValues.account_role User's account role
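
The audit-style events in this part of the reference share a common set of keys, with secondaryResourceType and secondaryResourceId present only in some of them. A minimal sketch of rendering such an event as one line, assuming data is a parsed dict (describe_audit_event is a hypothetical helper, and the output format is arbitrary):

```python
def describe_audit_event(data):
    """Render who did what to which resource, including the secondary resource if present."""
    target = f"{data['primaryResourceType']} {data['primaryResourceId']}"
    if "secondaryResourceType" in data:
        target += f" / {data['secondaryResourceType']} {data['secondaryResourceId']}"
    return (f"{data['timestamp']} {data['userId']} {data['action']} "
            f"{target} via {data['interactionMethod']}")


# create_user sample from above, without newValues.
sample = {
    "userId": "actor_id",
    "interactionMethod": "API",
    "primaryResourceType": "ACCOUNT",
    "primaryResourceId": "account_id",
    "secondaryResourceType": "USER",
    "secondaryResourceId": "user_id",
    "action": "CREATE",
    "timestamp": "2025-07-07T16:27:03.472937Z",
}

print(describe_audit_event(sample))
```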

delete_connection

"data" : {

"userId" : "user_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "connection_id" ,

"action" : "DELETE" ,

"timestamp" : "2025-07-07T17:38:55.074275Z"

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (DELETE) timestamp Event timestamp

delete_team

"data" : {

"userId" : "user_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "TEAM" ,

"primaryResourceId" : "team_id" ,

"action" : "DELETE" ,

"timestamp" : "2025-07-07T16:26:06.169074Z" ,

"oldValues" : {

"team_id" : "team_id"

}

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (TEAM) primaryResourceId Team ID action Action performed (DELETE) timestamp Event timestamp oldValues Previous team details oldValues.team_id Team ID

delete_user

"data" : {

"userId" : "user_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "USER" ,

"primaryResourceId" : "deleted_user_id" ,

"action" : "DELETE" ,

"timestamp" : "2025-07-07T16:31:21.147199Z" ,

"oldValues" : {

"user_id" : "deleted_user_id"

}

}

Field Description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (USER) primaryResourceId User ID of the deleted user action Action performed (DELETE) timestamp Event timestamp oldValues Previous user details oldValues.user_id User ID of the deleted user

edit_account

Edit authentication - use case

"data" : {

"userId" : "user_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "ACCOUNT" ,

"primaryResourceId" : "account_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-07T14:26:40.138604Z" ,

"oldValues" : {

"required_auth_type" : "ANY" ,

"saml_user_provisioning" : false ,

"browser_session_timeout" : 0.0 ,

"enable_saml" : false } ,

"newValues" : {

"required_auth_type" : "GOOGLE" ,

"saml_user_provisioning" : true ,

"browser_session_timeout" : 86400.0 ,

"enable_saml" : true }

}

fields description userId User ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (ACCOUNT) primaryResourceId Account ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous authentication settings oldValues.required_auth_type Previous required authentication type. Possible values: ANY, EMAIL, GOOGLE, MICROSOFT, SAML oldValues.saml_user_provisioning Previous SAML user provisioning state (true/false) oldValues.browser_session_timeout Previous browser session timeout (seconds) oldValues.enable_saml Previous SAML enabled state (true/false) newValues New authentication settings newValues.required_auth_type New required authentication type. Possible values: ANY, EMAIL, GOOGLE, MICROSOFT, SAML newValues.saml_user_provisioning New SAML user provisioning state (true/false) newValues.browser_session_timeout New browser session timeout (seconds) newValues.enable_saml New SAML enabled state (true/false)

Generate SCIM token - use case

"data" : {

"userId" : "user_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "ACCOUNT" ,

"primaryResourceId" : "account_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-08T18:30:23.011120Z" ,

"oldValues" : {

"change" : null ,

"type" : null } ,

"newValues" : {

"change" : "GENERATE" ,

"type" : "scim"

}

}

fields description userId User ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (ACCOUNT) primaryResourceId Account ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous values (change, type) oldValues.change Previous change value (null) oldValues.type Previous type value (null) newValues New values (change, type) newValues.change New change value (GENERATE) newValues.type New type value (scim)

Enable or disable SCIM - use case

"data" : {

"userId" : "user_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "ACCOUNT" ,

"primaryResourceId" : "account_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-07T14:25:16.587810Z" ,

"oldValues" : {

"enable_scim" : true } ,

"newValues" : {

"enable_scim" : false }

}

fields description userId User ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (ACCOUNT) primaryResourceId Account ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous account details newValues Updated account details enable_scim Boolean defining whether SCIM is enabled

edit_connection

Enable or disable a sync delay notification for a connection - use case

"data" : {

"userId" : "user_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "connection_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-22T14:50:49.356184217Z" ,

"oldValues" : {

"delay_notification_enabled" : true } ,

"newValues" : {

"delay_notification_enabled" : false }

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous connection details newValues Updated connection details delay_notification_enabled Boolean defining whether a sync delay notification is enabled or disabled
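
The timestamp values in these samples carry either six or nine fractional digits. Python's datetime stores microseconds only, so one option is to truncate the fraction before parsing. The sketch below assumes timestamps always end in Z as in the samples; parse_event_timestamp is a hypothetical helper.

```python
import re
from datetime import datetime, timezone

_TS = re.compile(r"(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.(\d+))?Z")


def parse_event_timestamp(text):
    """Parse a UTC timestamp, truncating fractional seconds to microseconds."""
    match = _TS.fullmatch(text)
    if match is None:
        raise ValueError(f"unexpected timestamp format: {text!r}")
    base = datetime.strptime(match.group(1), "%Y-%m-%dT%H:%M:%S")
    # Keep at most six fractional digits and pad shorter fractions with zeros.
    fraction = (match.group(2) or "")[:6].ljust(6, "0")
    return base.replace(microsecond=int(fraction), tzinfo=timezone.utc)


print(parse_event_timestamp("2025-07-22T14:50:49.356184217Z"))
print(parse_event_timestamp("2025-07-07T16:34:20.536752Z"))
```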

Modify schema status of a connection - use case

"data" : {

"userId" : "somewhere_sensitive" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "unnerving_recoverable" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-22T22:34:44.416147874Z" ,

"oldValues" : {

"schema_status" : "ready"

} ,

"newValues" : {

"schema_status" : "blocked_on_capture"

}

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous connection details newValues Updated connection details schema_status Schema status. Possible values: ready, blocked_on_capture, blocked_on_customer

Modify setup status of a connection - use case

"data" : {

"userId" : "user_id" ,

"interactionMethod" : "SYSTEM" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "connection_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-22T23:00:07.518267583Z" ,

"oldValues" : {

"setup_status" : "incomplete"

} ,

"newValues" : {

"setup_status" : "connected"

}

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI, SYSTEM primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous connection details newValues Updated connection details setup_status Connection setup status. Possible values: incomplete, connected, broken

Modify a schema of a connection - use case

"data" : {

"userId" : "user_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "connection_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-21T20:42:54.151014766Z" ,

"oldValues" : {

"tables" : {

"schema.Table" : {

"blocked" : true }

} ,

"schemas" : { } ,

"columns" : {

"schema.Table.Column" : {

"hashed" : false }

}

} ,

"newValues" : {

"tables" : {

"schema.Table" : {

"blocked" : false }

} ,

"schemas" : { } ,

"columns" : {

"schema.Table.Column" : {

"hashed" : true }

}

}

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous schema/table/column config newValues Updated schema/table/column config schemas List of schemas tables List of tables columns List of columns blocked Boolean specifying whether schema, table, or column is blocked from sync hashed Boolean specifying whether column's values are hashed
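
Because oldValues and newValues are nested in schema edits, a flat list of changed settings can be easier to read. The following is a rough sketch, assuming data is a parsed dict; flatten and audit_diff are hypothetical helpers. Paths are joined with "/" because the keys themselves contain dots.

```python
def flatten(obj, prefix=""):
    """Flatten nested dicts into {"a/b/c": value} pairs; empty dicts yield nothing."""
    flat = {}
    for key, value in obj.items():
        path = f"{prefix}/{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def audit_diff(data):
    """Return {path: (old, new)} for every leaf that differs between oldValues and newValues."""
    old = flatten(data.get("oldValues") or {})
    new = flatten(data.get("newValues") or {})
    return {path: (old.get(path), new.get(path))
            for path in sorted(set(old) | set(new))
            if old.get(path) != new.get(path)}


# oldValues and newValues from the sample event above.
sample = {
    "oldValues": {
        "tables": {"schema.Table": {"blocked": True}},
        "schemas": {},
        "columns": {"schema.Table.Column": {"hashed": False}},
    },
    "newValues": {
        "tables": {"schema.Table": {"blocked": False}},
        "schemas": {},
        "columns": {"schema.Table.Column": {"hashed": True}},
    },
}

for path, (before, after) in audit_diff(sample).items():
    print(f"{path}: {before} -> {after}")
```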

Modify sync frequency of a connection - use case

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "API" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "connection_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-08T10:07:12.697347Z" ,

"oldValues" : {

"sync_frequency" : 5

} ,

"newValues" : {

"sync_frequency" : 10

}

}

Field Description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous connection details oldValues.sync_frequency Previous sync frequency newValues Updated connection details newValues.sync_frequency Updated sync frequency

Modify sync delay sensitivity of a connection - use case

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "API" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "connection_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-07T17:43:23.883391Z" ,

"oldValues" : {

"delay_sensitivity" : "HIGH"

} ,

"newValues" : {

"delay_sensitivity" : "NORMAL"

}

}

Field Description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous connection details oldValues.delay_sensitivity Previous delay sensitivity value newValues Updated connection details newValues.delay_sensitivity Updated delay sensitivity value

Modify connection delay threshold - use case

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "connection_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-07T17:44:06.548206Z" ,

"oldValues" : {

"delay_sensitivity" : "later"

} ,

"newValues" : {

"delay_sensitivity" : "sooner"

}

}

Field Description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous connection details oldValues.delay_sensitivity Previous delay sensitivity value newValues Updated connection details newValues.delay_sensitivity Updated delay sensitivity value

Modify connection delay threshold (custom) - use case

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "connection_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-08T10:04:39.531187Z" ,

"oldValues" : {

"delay_sensitivity" : "later"

} ,

"newValues" : {

"delay_sensitivity" : "custom (20m)"

}

}

Field Description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous connection details oldValues.delay_sensitivity Previous delay sensitivity value newValues Updated connection details newValues.delay_sensitivity Updated delay sensitivity value (minutes)
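
A custom threshold appears in the sample as the string "custom (20m)". If the number of minutes is needed, it can be extracted with a regular expression. This sketch assumes the format always matches that single sample, which this documentation does not confirm; custom_delay_minutes is a hypothetical helper.

```python
import re

_CUSTOM = re.compile(r"custom \((\d+)m\)")


def custom_delay_minutes(value):
    """Return the minutes from a "custom (Nm)" value, or None for any other value."""
    match = _CUSTOM.fullmatch(value)
    return int(match.group(1)) if match else None


print(custom_delay_minutes("custom (20m)"))  # 20
print(custom_delay_minutes("later"))         # None
```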

Pause or resume a connection - use case

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "API" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "connection_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-07T16:35:14.456698Z" ,

"oldValues" : {

"is_paused" : true } ,

"newValues" : {

"is_paused" : false }

}

Field Description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous connection details oldValues.is_paused Previous paused state (true) newValues Updated connection details newValues.is_paused Updated paused state (false)

Migrate schema of a connection - use case

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "API" ,

"primaryResourceType" : "CONNECTION" ,

"primaryResourceId" : "connection_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-08T18:37:14.728973Z" ,

"oldValues" : {

"schema_changes" : null } ,

"newValues" : {

"schema_changes" : {

"phase" : 2 ,

"state" : "approved"

}

}

}

Field Description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (CONNECTION) primaryResourceId Connection ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous connection details oldValues.schema_changes Previous schema changes (null) newValues Updated connection details newValues.schema_changes Updated schema changes with phase and state newValues.schema_changes.phase Phase of the schema migration. Possible values: 1, 2 newValues.schema_changes.state State of the schema migration. Possible values: approved, rejected

edit_destination

Modify region of a destination - use case

"data" : {

"userId" : "user_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "DESTINATION" ,

"primaryResourceId" : "destination_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-07T17:27:45.123456Z" ,

"oldValues" : {

"region" : "previous_region"

} ,

"newValues" : {

"region" : "region"

}

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (DESTINATION) primaryResourceId Destination ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous destination details oldValues.region Previous destination region newValues Updated destination details newValues.region Updated destination region

Modify failover region of a destination - use case

"data" : {

"userId" : "user_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "DESTINATION" ,

"primaryResourceId" : "destination_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-07T17:27:45.123456Z" ,

"oldValues" : {

"failover_region" : null } ,

"newValues" : {

"failover_region" : "failover_region_value"

}

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (DESTINATION) primaryResourceId Destination ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous destination details oldValues.failover_region Previous destination failover region (null) newValues Updated destination details newValues.failover_region Updated destination failover region

Modify destination name - use case

"data" : {

"userId" : "userId" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "DESTINATION" ,

"primaryResourceId" : "destinationId" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-07T17:28:02.629679Z" ,

"oldValues" : {

"name" : "foo"

} ,

"newValues" : {

"name" : "bar"

}

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (DESTINATION) primaryResourceId Destination ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous destination details oldValues.name Previous destination name newValues Updated destination details newValues.name Updated destination name

Delete a destination - use case

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "WEB_UI" ,

"primaryResourceType" : "DESTINATION" ,

"primaryResourceId" : "destination_id" ,

"action" : "DELETE" ,

"timestamp" : "2025-07-07T17:41:36.683358Z"

}

fields description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (DESTINATION) primaryResourceId Destination ID action Action performed (DELETE) timestamp Event timestamp

Add new external logging service to a destination - use case

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "API" ,

"primaryResourceType" : "DESTINATION" ,

"primaryResourceId" : "destination_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-08T10:08:12.463769Z" ,

"oldValues" : {

"credentials" : null } ,

"newValues" : {

"credentials" : {

"user" : "value" ,

"password" : "*****"

}

}

}

Field Description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (DESTINATION) primaryResourceId Destination ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous destination details oldValues.credentials Previous credentials (null) newValues Updated destination details newValues.credentials Updated credentials as list of service-specific key-value pairs. Sensitive information is masked

Update external logging service for a destination - use case

"data" : {

"userId" : "actor_id" ,

"interactionMethod" : "API" ,

"primaryResourceType" : "DESTINATION" ,

"primaryResourceId" : "destination_id" ,

"action" : "EDIT" ,

"timestamp" : "2025-07-08T10:09:10.198792Z" ,

"oldValues" : {

"credentials" : {

"user" : "previous_value" ,

"password" : "*****"

}

} ,

"newValues" : {

"credentials" : {

"user" : "value" ,

"password" : "*****"

}

}

}

Field Description userId Actor user ID interactionMethod Interaction environment. Possible values: API, WEB_UI primaryResourceType Resource type (DESTINATION) primaryResourceId Destination ID action Action performed (EDIT) timestamp Event timestamp oldValues Previous destination details oldValues.credentials Previous credentials as list of service-specific key-value pairs. Sensitive information is masked newValues Updated destination details newValues.credentials Updated credentials as list of service-specific key-value pairs. Sensitive information is masked

Remove external logging service from a destination - use case

"data" : {
  "userId" : "actor_id",
  "interactionMethod" : "API",
  "primaryResourceType" : "DESTINATION",
  "primaryResourceId" : "destination_id",
  "action" : "EDIT",
  "timestamp" : "2025-07-08T10:12:08.633217Z",
  "oldValues" : {
    "change" : null
  },
  "newValues" : {
    "change" : "REMOVE"
  }
}

| Field | Description |
| --- | --- |
| userId | Actor user ID |
| interactionMethod | Interaction environment. Possible values: API, WEB_UI |
| primaryResourceType | Resource type (DESTINATION) |
| primaryResourceId | Destination ID |
| action | Action performed (EDIT) |
| timestamp | Event timestamp |
| oldValues | Previous destination details |
| oldValues.change | Previous change action (null) |
| newValues | Updated destination details |
| newValues.change | Updated change action (REMOVE) |
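The three external logging service use cases above all report `action` as `EDIT` on a `DESTINATION`, so they can only be told apart by the shape of `oldValues` and `newValues`. The sketch below illustrates one way to do that in Python. The function name `classify_logging_change` and the labels it returns are hypothetical, and the rules are inferred only from the sample payloads above. Other kinds of destination edits may produce payloads that this sketch labels `OTHER` or misclassifies.

```python
def classify_logging_change(data):
    """Classify a destination EDIT payload as ADD, UPDATE, REMOVE, or OTHER.

    `data` is the parsed "data" object of a log event. The rules mirror the
    sample payloads: a REMOVE marker in newValues, or a credentials object
    in newValues with either a null or a populated previous value.
    """
    old = data.get("oldValues") or {}
    new = data.get("newValues") or {}
    if new.get("change") == "REMOVE":
        return "REMOVE"
    if "credentials" in new:
        return "ADD" if old.get("credentials") is None else "UPDATE"
    return "OTHER"


removal = {
    "primaryResourceType": "DESTINATION",
    "action": "EDIT",
    "oldValues": {"change": None},
    "newValues": {"change": "REMOVE"},
}
print(classify_logging_change(removal))
```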

edit_team

Modify an account role assigned to a team - use case

"data" : {
  "userId" : "actor_id",
  "interactionMethod" : "WEB_UI",
  "primaryResourceType" : "TEAM",
  "primaryResourceId" : "team_id",
  "action" : "EDIT",
  "timestamp" : "2025-07-07T16:37:50.548466Z",
  "oldValues" : {
    "account_role" : "previous_role_name"
  },
  "newValues" : {
    "account_role" : "role_name"
  }
}

| Field | Description |
| --- | --- |
| userId | Actor user ID |
| interactionMethod | Interaction environment. Possible values: API, WEB_UI |
| primaryResourceType | Resource type (TEAM) |
| primaryResourceId | Team ID |
| action | Action performed (EDIT) |
| timestamp | Event timestamp |
| oldValues | Previous team details |
| oldValues.account_role | Previous team account role |
| newValues | Updated team details |
| newValues.account_role | Updated team account role |

Modify a team role for a connection - use case

"data" : {
  "userId" : "actor_id",
  "interactionMethod" : "API",
  "primaryResourceType" : "CONNECTION",
  "primaryResourceId" : "connection_id",
  "secondaryResourceType" : "TEAM",
  "secondaryResourceId" : "team_id",
  "action" : "EDIT",
  "timestamp" : "2025-07-07T17:33:12.073434Z",
  "oldValues" : {
    "permission" : "previous_role_name"
  },
  "newValues" : {
    "permission" : "role_name"
  }
}

| Field | Description |
| --- | --- |
| userId | Actor user ID |
| interactionMethod | Interaction environment. Possible values: API, WEB_UI |
| primaryResourceType | Resource type (CONNECTION) |
| primaryResourceId | Connection ID |
| secondaryResourceType | Secondary resource type (TEAM) |
| secondaryResourceId | Team ID |
| action | Action performed (EDIT) |
| timestamp | Event timestamp |
| oldValues | Previous team details |
| oldValues.permission | Previous team role for connection |
| newValues | Updated team details |
| newValues.permission | Updated team role for connection |

Modify a team role for a destination - use case

"data" : {
  "userId" : "actor_id",
  "interactionMethod" : "API",
  "primaryResourceType" : "DESTINATION",
  "primaryResourceId" : "destination_id",
  "secondaryResourceType" : "TEAM",
  "secondaryResourceId" : "team_id",
  "action" : "EDIT",
  "timestamp" : "2025-07-07T17:33:12.073434Z",
  "oldValues" : {
    "permission" : "previous_role_name"
  },
  "newValues" : {
    "permission" : "role_name"
  }
}

| Field | Description |
| --- | --- |
| userId | Actor user ID |
| interactionMethod | Interaction environment. Possible values: API, WEB_UI |
| primaryResourceType | Resource type (DESTINATION) |
| primaryResourceId | Destination ID |
| secondaryResourceType | Secondary resource type (TEAM) |
| secondaryResourceId | Team ID |
| action | Action performed (EDIT) |
| timestamp | Event timestamp |
| oldValues | Previous team details |
| oldValues.permission | Previous team role for destination |
| newValues | Updated team details |
| newValues.permission | Updated team role for destination |

Add a user to a team - use case

"data" : {
  "userId" : "actor_id",
  "interactionMethod" : "WEB_UI",
  "primaryResourceType" : "TEAM",
  "primaryResourceId" : "team_id",
  "secondaryResourceType" : "USER",
  "secondaryResourceId" : "user_id",
  "action" : "EDIT",
  "timestamp" : "2025-07-07T16:27:59.336366Z",
  "newValues" : {
    "change" : "ADD",
    "new_user_id" : "user_id",
    "type" : "USER",
    "team_name" : "team_name"
  }
}

| Field | Description |
| --- | --- |
| userId | Actor user ID |
| interactionMethod | Interaction environment. Possible values: API, WEB_UI |
| primaryResourceType | Resource type (TEAM) |
| primaryResourceId | Team ID |
| secondaryResourceType | Secondary resource type (USER) |
| secondaryResourceId | User ID |
| action | Action performed (EDIT) |
| timestamp | Event timestamp |
| newValues | Details of the change |
| newValues.change | Type of change (ADD) |
| newValues.new_user_id | ID of the user added to the team |
| newValues.type | Resource type (USER) |
| newValues.team_name | Team name |

Remove a user from a team - use case

"data" : {
  "userId" : "actor_id",
  "interactionMethod" : "WEB_UI",
  "primaryResourceType" : "TEAM",
  "primaryResourceId" : "team_id",
  "secondaryResourceType" : "USER",
  "secondaryResourceId" : "user_id",
  "action" : "EDIT",
  "timestamp" : "2025-07-07T17:26:12.523825Z",
  "oldValues" : {
    "change" : "REMOVE",
    "resource_id" : "user_id",
    "type" : "USER",
    "team_name" : "team_name"
  }
}

| Field | Description |
| --- | --- |
| userId | Actor user ID |
| interactionMethod | Interaction environment. Possible values: API, WEB_UI |
| primaryResourceType | Resource type (TEAM) |
| primaryResourceId | Team ID |
| secondaryResourceType | Secondary resource type (USER) |
| secondaryResourceId | User ID |
| action | Action performed (EDIT) |
| timestamp | Event timestamp |
| oldValues | Previous user-team association details |
| oldValues.change | Previous change type (REMOVE) |
| oldValues.resource_id | Previous user ID |
| oldValues.type | Previous resource type (USER) |
| oldValues.team_name | Team name |

Assign a team manager - use case

"data" : {
  "userId" : "actor_id",
  "interactionMethod" : "WEB_UI",
  "primaryResourceType" : "TEAM",
  "primaryResourceId" : "team_id",
  "secondaryResourceType" : "USER",
  "secondaryResourceId" : "user_id",
  "action" : "EDIT",
  "timestamp" : "2025-07-07T17:37:53.905038Z",
  "newValues" : {
    "user_id" : "user_id",
    "change" : "ASSIGN_TEAM_MANAGER",
    "type" : "USER",
    "team_name" : "team_name"
  }
}

| Field | Description |
| --- | --- |
| userId | Actor user ID |
| interactionMethod | Interaction environment. Possible values: API, WEB_UI |
| primaryResourceType | Resource type (TEAM) |
| primaryResourceId | Team ID |
| secondaryResourceType | Secondary resource type (USER) |
| secondaryResourceId | User ID |
| action | Action performed (EDIT) |
| timestamp | Event timestamp |
| newValues | New user details |
| newValues.user_id | User ID |
| newValues.change | Change type (ASSIGN_TEAM_MANAGER) |
| newValues.type | Resource type (USER) |
| newValues.team_name | Team name |

Unassign a team manager - use case

"data" : {
  "userId" : "actor_id",
  "interactionMethod" : "WEB_UI",
  "primaryResourceType" : "TEAM",
  "primaryResourceId" : "team_id",
  "secondaryResourceType" : "USER",
  "secondaryResourceId" : "user_id",
  "action" : "EDIT",
  "timestamp" : "2025-07-07T17:37:05.906698Z",
  "newValues" : {
    "user_id" : "user_id",
    "change" : "UNASSIGN_TEAM_MANAGER",
    "type" : "USER",
    "team_name" : "team_name"
  }
}

| Field | Description |
| --- | --- |
| userId | Actor user ID |
| interactionMethod | Interaction environment. Possible values: API, WEB_UI |
| primaryResourceType | Resource type (TEAM) |
| primaryResourceId | Team ID |
| secondaryResourceType | Secondary resource type (USER) |
| secondaryResourceId | User ID |
| action | Action performed (EDIT) |
| timestamp | Event timestamp |
| newValues | New user details |
| newValues.user_id | User ID |
| newValues.change | Change type (UNASSIGN_TEAM_MANAGER) |
| newValues.type | Resource type (USER) |
| newValues.team_name | Team name |
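The `edit_team` use cases above share one event name but carry differently shaped payloads, so a consumer usually has to inspect `oldValues` and `newValues` to tell them apart. The following Python sketch shows one possible way to turn a payload into a one-line summary. The function name `describe_team_edit` and the summary wording are hypothetical, and the branching is inferred only from the sample payloads in this section, so payload shapes not shown here fall through to a generic message.

```python
def describe_team_edit(data):
    """Return a short description of an edit_team "data" payload."""
    old = data.get("oldValues") or {}
    new = data.get("newValues") or {}
    primary_type = data.get("primaryResourceType")
    primary_id = data.get("primaryResourceId")
    # Membership changes carry a "change" marker in newValues (ADD,
    # ASSIGN/UNASSIGN_TEAM_MANAGER) or in oldValues (REMOVE).
    change = new.get("change") or old.get("change")

    if change == "ADD":
        return f"user {new.get('new_user_id')} added to team {primary_id}"
    if change == "REMOVE":
        return f"user {old.get('resource_id')} removed from team {primary_id}"
    if change in ("ASSIGN_TEAM_MANAGER", "UNASSIGN_TEAM_MANAGER"):
        verb = "assigned as" if change == "ASSIGN_TEAM_MANAGER" else "unassigned as"
        return f"user {new.get('user_id')} {verb} manager of team {primary_id}"
    if "account_role" in new:
        return (f"team {primary_id} account role: "
                f"{old.get('account_role')} -> {new.get('account_role')}")
    if "permission" in new:
        # For connection and destination roles, the team is the secondary resource.
        return (f"team {data.get('secondaryResourceId')} role on {primary_type} "
                f"{primary_id}: {old.get('permission')} -> {new.get('permission')}")
    return "unrecognized edit_team payload"


payload = {
    "primaryResourceType": "CONNECTION",
    "primaryResourceId": "connection_id",
    "secondaryResourceType": "TEAM",
    "secondaryResourceId": "team_id",
    "action": "EDIT",
    "oldValues": {"permission": "previous_role_name"},
    "newValues": {"permission": "role_name"},
}
print(describe_team_edit(payload))
```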

edit_user

Modify an account role assigned to a user - use case

"data" : {
  "userId" : "actor_id",
  "interactionMethod" : "WEB_UI",
  "primaryResourceType" : "USER",
  "primaryResourceId" : "user_id",
  "action" : "EDIT",
  "timestamp" : "2025-07-07T17:29:50.046679Z",
  "oldValues" : {
    "account_role" : "oldRoleName"
  },
  "newValues" : {
    "account_role" : "newRoleName"
  }
}

| Field | Description |
| --- | --- |
| userId | Actor user ID |
| interactionMethod | Interaction environment. Possible values: API, WEB_UI |
| primaryResourceType | Resource type (USER) |
| primaryResourceId | User ID |
| action | Action performed (EDIT) |
| timestamp | Event timestamp |
| oldValues | Previous user details |
| oldValues.account_role | Previous user account role |
| newValues | Updated user details |
| newValues.account_role | Updated user account role |
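For audits, an account role change like the one above is often flattened into a single record with a parsed timestamp. The sketch below shows one way to do that in Python. The names `parse_event_timestamp` and `account_role_change`, and the keys of the returned dictionary, are hypothetical. It assumes the `timestamp` field always uses the ISO 8601 form seen in the samples, with six fractional digits and a trailing `Z`.

```python
from datetime import datetime


def parse_event_timestamp(value):
    """Parse a timestamp such as "2025-07-07T17:29:50.046679Z" as UTC."""
    # Older Python versions do not accept a trailing "Z" in fromisoformat,
    # so it is replaced with an explicit UTC offset first.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def account_role_change(data):
    """Flatten an edit_user account role payload into one audit record."""
    old = data.get("oldValues") or {}
    new = data.get("newValues") or {}
    return {
        "user": data["primaryResourceId"],
        "actor": data["userId"],
        "from": old.get("account_role"),
        "to": new.get("account_role"),
        "at": parse_event_timestamp(data["timestamp"]),
    }


event_data = {
    "userId": "actor_id",
    "interactionMethod": "WEB_UI",
    "primaryResourceType": "USER",
    "primaryResourceId": "user_id",
    "action": "EDIT",
    "timestamp": "2025-07-07T17:29:50.046679Z",
    "oldValues": {"account_role": "oldRoleName"},
    "newValues": {"account_role": "newRoleName"},
}
record = account_role_change(event_data)
print(record["user"], record["from"], "->", record["to"], "at", record["at"].isoformat())
```

Keeping the parsed value timezone-aware makes it safe to compare against other UTC timestamps, such as the top-level `created` field of the log event.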

Modify a user role for a connection - use case

"data" : {
  "userId" : "actor_id",
  "interactionMethod" : "API",
  "primaryResourceType" : "CONNECTION",
  "primaryResourceId" : "connection_id",
  "secondaryResourceType" : "USER",
  "secondaryResourceId" : "user_id",
  "action" : "EDIT",
  "timestamp" : "2025-07-07T17:33:12.073434Z",
  "oldValues" : {
    "permission" : "previous_role_name"
  },
  "newValues" : {
    "permission" : "role_name"
  }
}

| Field | Description |
| --- | --- |
| userId | Actor user ID |
| interactionMethod | Interaction environment. Possible values: API, WEB_UI |
| primaryResourceType | Resource type (CONNECTION) |
| primaryResourceId | Connection ID |
| secondaryResourceType | Secondary resource type (USER) |
| secondaryResourceId | User ID |
| action | Action performed (EDIT) |
| timestamp | Event timestamp |
| oldValues | Previous user details |
| oldValues.permission | Previous user role for connection |
| newValues | Updated user details |
| newValues.permission | Updated user role for connection |

Modify a user role for a destination - use case

"data" : {
  "userId" : "actor_id",
  "interactionMethod" : "API",
  "primaryResourceType" : "DESTINATION",
  "primaryResourceId" : "destination_id",
  "secondaryResourceType" : "USER",
  "secondaryResourceId" : "user_id",
  "action" : "EDIT",
  "timestamp" : "2025-07-07T17:33:12.073434Z",
  "oldValues" : {
    "permission" : "previous_role_name"
  },
  "newValues" : {
    "permission" : "role_name"
  }
}