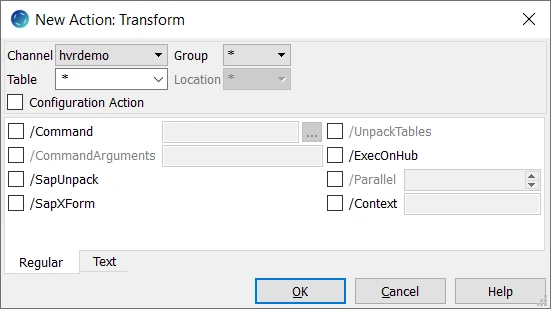

Transform

Description

Action Transform defines a data mapping that is applied inside the HVR pipeline. A transform can either be a command (a script or an executable), or it can be built into HVR (such as /SapAugment). The transform happens after the data has been captured from a location and before it is integrated into the target, and it is fed all the data in that job's cycle. To change the contents of a file as HVR reads it or as HVR writes it, use FileFormat /CaptureConverter or /IntegrateConverter. The Transform action happens between the changes made by those converters.

A command transform is fed data in XML format. This is a representation of all the data that passes through HVR's pipeline. The format is defined in $HVR_HOME/lib/hvr_private.dtd.

Parameters

This section describes the parameters available for action Transform.

| Parameter | Argument | Description |
|---|---|---|
| /Command | path | Name of transform command. This can be a script or an executable. Scripts can be shell scripts on Unix and batch scripts on Windows, or can be files beginning with a 'magic line' containing the interpreter for the script, e.g. #!perl. A transform command should read from its stdin and write the transformed bytes to stdout. Argument path can be an absolute or a relative pathname. If a relative pathname is supplied, the command should be located in $HVR_HOME/lib/transform. This parameter can either be defined on a specific table or on all tables (*). Defining it on a specific table could be slower because the transform will be stopped and restarted each time the current table name alternates. However, defining it on all tables (*) requires that all data go through the transform, which could also be slower and consumes extra resources (e.g. disk space for a /Command transform). |
| /CommandArguments | userarg | Value(s) of parameter(s) for transform (space separated). |
| /SapAugment | | Capture job selecting for de-clustering of multi-row SAP cluster tables. This parameter is no longer supported as of HVR version 5.3.1/19. |
| /SapUnpack (since v5.7.5/7) | | Unpack the SAP pool, cluster, and long text table (STXL). By defining this parameter, HVR can capture changes from the SAP pool, cluster, and long text tables (binary STXL data) and transform them into unpacked readable data. In case of a HANA source system, unpacking of the STXL table (for long text) is possible only during HVR Refresh; it is not possible to unpack during Capture from the database log. The SapUnpack license is required for using this functionality. See also: Additional information about SapUnpack. |
| /SapXForm | | Invoke SAP transformation for SAP pool and cluster tables. For more information about SapXForm, see Requirements for SapXForm. This parameter requires Integrate /Burst to be defined in the channel. This parameter should not be used together with AdaptDDL or Capture /Coalesce. This parameter requires the SAP decluster engine, which is included in HVR distributions since HVR 5.3.1/4. |
| /UnpackTables | | Transform will map *_pack tables into *_*_unpack tables. |
| /ExecOnHub | | Execute transform on hub instead of location's machine. |
| /Parallel | N | Run transform in N parallel branches. Rows will be distributed using a hash of their distribution keys, or round robin if a distribution key is not available. Parallelization starts only after the first 1000 rows. |
| /Context | context | Ignore action unless refresh/compare context ctx is enabled. The value should be the name of a context (a lowercase identifier). It can also have the form !ctx, which means that the action is effective unless context ctx is enabled. One or more contexts can be enabled for HVR Compare or Refresh (on the command line with option -Cctx). Defining an action which is only effective when a context is enabled can have different uses. For example, if action Transform /Command="C:/hvr/script/myscriptfile" /Context=qqq is defined, then during replication no transform will be performed, but if a refresh is done with context qqq enabled (option -Cqqq), then the transform will be performed. If a 'context variable' is supplied (using option -Vxxx=val), then this is passed to the transform as environment variable $HVR_VAR_XXX. |
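The /Context rule described in the table can be modelled in a few lines. The sketch below is only an illustration of the documented behaviour, not HVR code; the function name `action_is_effective` and its arguments are hypothetical.

```python
def action_is_effective(context_param, enabled_contexts):
    """Model of the /Context rule (hypothetical helper, not part of HVR).

    context_param: None if /Context is not defined, else "ctx" or "!ctx".
    enabled_contexts: context names enabled with option -Cctx
                      (empty during normal replication).
    """
    if context_param is None:
        return True  # no /Context: the action is always effective
    if context_param.startswith("!"):
        # Form !ctx: effective unless context ctx is enabled.
        return context_param[1:] not in enabled_contexts
    # Form ctx: ignored unless context ctx is enabled.
    return context_param in enabled_contexts


# Replication: no contexts are enabled, so a /Context=qqq action is ignored.
print(action_is_effective("qqq", set()))     # False
# Refresh with option -Cqqq: the action is performed.
print(action_is_effective("qqq", {"qqq"}))   # True
# /Context=!qqq is effective unless qqq is enabled.
print(action_is_effective("!qqq", {"qqq"}))  # False
```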

Command Transform Environment

A transform command should read from its stdin and write the transformed bytes to stdout. If a transform command encounters a problem, it should write an error to stderr and return with exit code 1, which will cause the replication jobs to fail. The transform command is called with multiple arguments, which should be defined using parameter /CommandArguments.
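As a minimal sketch of this contract, the Python outline below reads all input, writes the (here unchanged) bytes back, and returns exit code 1 with a message on stderr if anything fails. The functions `transform` and `run`, the argument value `arg1`, and the sample payload `<data/>` are hypothetical placeholders; the payload is not real HVR XML. The demonstration uses in-memory streams so it can be run without HVR.

```python
import io
import sys


def transform(data: bytes, args: list) -> bytes:
    """Hypothetical transformation step: returns the input unchanged."""
    return data


def run(args, stdin, stdout, stderr) -> int:
    """Read all input, write the transformed bytes, return the exit code."""
    try:
        stdout.write(transform(stdin.read(), args))
        stdout.flush()
        return 0
    except Exception as exc:
        # Report the problem on stderr and signal failure with exit code 1.
        stderr.write(f"transform failed: {exc}\n")
        return 1


# Dry run with in-memory streams instead of the real stdin/stdout.
out, err = io.BytesIO(), io.StringIO()
code = run(["arg1"], io.BytesIO(b"<data/>"), out, err)
print(code, out.getvalue(), repr(err.getvalue()))

# In a deployed script the last line would instead be something like:
# sys.exit(run(sys.argv[1:], sys.stdin.buffer, sys.stdout.buffer, sys.stderr))
```

Using the binary `sys.stdin.buffer` and `sys.stdout.buffer` streams avoids any text decoding, so the bytes pass through exactly as received. The values given with /CommandArguments would arrive in `sys.argv[1:]`.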

A transform inherits the environment from its parent process. On the hub, the parent of the transform's parent process is the HVR Scheduler. On a remote Unix machine, it is the inetd daemon. On a remote Windows machine, it is the HVR Remote Listener service. Differences from the parent process's environment are as follows:

- Environment variables $HVR_CHN_NAME and $HVR_LOC_NAME are set.

- Environment variable $HVR_TRANSFORM_MODE is set to either value cap, integ, cmp, refr_read or refr_write.

- Environment variable $HVR_CONTEXTS is defined with a comma-separated list of contexts defined with HVR Refresh or Compare (option -Cctx).

- Environment variables $HVR_VAR_XXX are defined for each context variable supplied to HVR Refresh or Compare (option -Vxxx=val).

- Any variable defined by action Environment is also set in the transform's environment, unless /ExecOnHub is defined.

- The current working directory is $HVR_TMP, or $HVR_CONFIG/tmp if this is not defined.

- stdin is redirected to a socket (HVR writes the original file contents into this), whereas stdout and stderr are redirected to separate temporary files. HVR replaces the contents of the original file with the bytes that the transform writes to its stdout. Anything that the transform writes to its stderr is printed in the job's log file on the hub machine.
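A command transform can inspect these settings at startup. The following sketch collects the variables named in the list above into a dictionary and reports them on stderr, which ends up in the job's log file. The helper name `describe_environment` and the shape of the returned dictionary are assumptions made for illustration.

```python
import os
import sys


def describe_environment(env) -> dict:
    """Collect the HVR-provided settings visible to a command transform."""
    # $HVR_CONTEXTS is a comma-separated list; it may be absent or empty.
    contexts = [c for c in env.get("HVR_CONTEXTS", "").split(",") if c]
    # Each context variable arrives as $HVR_VAR_XXX; strip the prefix.
    prefix = "HVR_VAR_"
    variables = {k[len(prefix):]: v for k, v in env.items() if k.startswith(prefix)}
    return {
        "channel": env.get("HVR_CHN_NAME"),
        "location": env.get("HVR_LOC_NAME"),
        "mode": env.get("HVR_TRANSFORM_MODE"),  # cap, integ, cmp, refr_read or refr_write
        "contexts": contexts,
        "variables": variables,
    }


info = describe_environment(os.environ)
# Diagnostics go to stderr so that they do not mix with the transformed data.
print(f"transform environment: {info}", file=sys.stderr)
```

Outside of HVR the variables are normally unset, so the script prints `None` for the channel, location and mode and empty collections for the rest.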