Db2 for z/OS Requirements

This section describes the requirements, access privileges, and other features of Fivetran HVR when using 'Db2 for z/OS' for replication.

Supported Platforms

- Learn about the Db2 for z/OS versions compatible with HVR on our Platform Support Matrix page (6.3.5, 6.3.0, 6.2.5, 6.2.0, 6.1.5, and 6.1.0).

Supported Capabilities

- Discover what HVR offers for Db2 for z/OS on our Capabilities for Db2 for z/OS page (6.3.5, 6.3.0, 6.2.5, 6.2.0, 6.1.5, and 6.1.0).

Data Management

Learn how HVR maps data types between source and target DBMSes or file systems on the Data Type Mapping page. For the list of the supported Db2 for z/OS data types and their mapping, see Data Type Mapping for Db2 for z/OS.

Understand the character encodings HVR supports for Db2 for z/OS on the Supported Character Encodings page.

Introduction

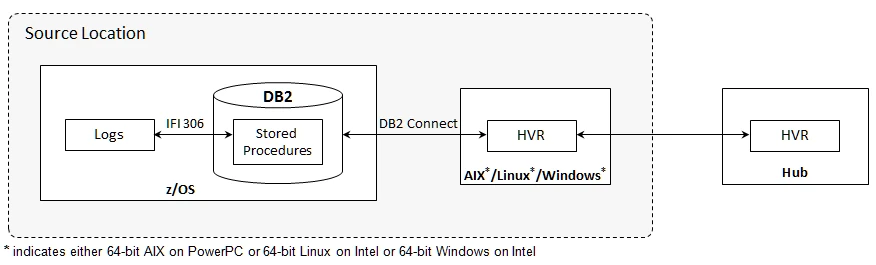

To capture from Db2 for z/OS, HVR must be installed on a separate machine (64-bit Linux on Intel, 64-bit Windows on Intel, or 64-bit AIX on PowerPC) from which it accesses Db2 on the z/OS machine. Additionally, the HVR stored procedures must be installed on the Db2 for z/OS machine so that HVR can access the Db2 log files. For the installation steps, see section Installing Capture Stored Procedures.

HVR requires 'Db2 Connect' for connecting to Db2 for z/OS.

Prerequisites for Fivetran HVR Machine

HVR requires one of the following to be installed on the machine from which HVR connects to Db2 on z/OS: the IBM Data Server Driver for ODBC and CLI, a Db2 client, a Db2 server, or Db2 Connect. The Db2 client should have an instance to store the data required for the remote connection.

To install the IBM Data Server Driver for ODBC and CLI, refer to the IBM documentation.

To set up the Db2 client or Db2 server or Db2 Connect, use the following commands to catalog the TCP/IP node and the remote database:

db2 catalog tcpip node nodename remote hostname server portnumber
db2 catalog database databasename at node nodename

- nodename is the local nickname for the remote machine that contains the database you want to catalog.

- hostname is the name of the host/node where the target database resides.

- databasename is the name of the database you want to catalog.
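As a minimal sketch of how the placeholders fit together, the script below fills in the two catalog commands from shell variables and prints them for review instead of running them. Every value in it (`zosnode`, `zos.example.com`, `446`, `DB2LOC`) is a hypothetical placeholder, not a value taken from this documentation; substitute the details of your own Db2 for z/OS system.

```shell
#!/bin/sh
# Hypothetical example values; replace them with your own environment details.
NODE_NAME="zosnode"          # local nickname for the remote z/OS machine
HOST_NAME="zos.example.com"  # host where the Db2 for z/OS database resides
PORT_NUMBER="446"            # assumed TCP/IP port of the Db2 subsystem
DATABASE_NAME="DB2LOC"       # name of the database to catalog

# Build the two catalog commands in the order they must be run:
# the node first, then the database that refers to that node.
catalog_node_cmd="db2 catalog tcpip node ${NODE_NAME} remote ${HOST_NAME} server ${PORT_NUMBER}"
catalog_db_cmd="db2 catalog database ${DATABASE_NAME} at node ${NODE_NAME}"

# Print the commands for review; paste them into a Db2 command-line
# environment (or wrap them in eval) once the values are correct.
echo "$catalog_node_cmd"
echo "$catalog_db_cmd"
```

After running the real commands, `db2 list node directory` and `db2 list database directory` can be used to confirm that both entries were created.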

For more information about configuring Db2 client or Db2 server or Db2 Connect, refer to IBM documentation.

Verifying Connection to the Db2 Server

To test the connection to the Db2 server on the z/OS machine, use one of the following commands, depending on the installed client software:

- When using IBM Data Server Driver for ODBC and CLI

db2cli validate -database databasename:servername:portnumber -connect -user username -passwd password

or

db2cli validate -dsn dsnname -connect -user username -passwd password

- When using Db2 client or Db2 server or Db2 Connect

db2 connect to databasename user userid
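The choice between the two verification paths can be sketched as a small shell helper that prints the matching command for the installed client type. The function name `verify_command` and all connection values are hypothetical and used only for illustration; the printed commands are the ones listed above with the placeholders filled in.

```shell
#!/bin/sh
# Hypothetical connection details; replace them with your own.
DATABASE_NAME="DB2LOC"
SERVER_NAME="zos.example.com"
PORT_NUMBER="446"
USER_NAME="hvruser"

# verify_command is an illustrative helper, not part of HVR or Db2.
# It prints the verification command for the given client type.
verify_command() {
  case "$1" in
    driver)
      # IBM Data Server Driver for ODBC and CLI.
      # The literal word "password" is left as a placeholder on purpose.
      echo "db2cli validate -database ${DATABASE_NAME}:${SERVER_NAME}:${PORT_NUMBER} -connect -user ${USER_NAME} -passwd password"
      ;;
    client)
      # Db2 client, Db2 server, or Db2 Connect (database must be cataloged).
      echo "db2 connect to ${DATABASE_NAME} user ${USER_NAME}"
      ;;
    *)
      echo "usage: verify_command driver|client" >&2
      return 1
      ;;
  esac
}

verify_command driver
verify_command client
```

Printing the command rather than executing it keeps the password out of the script; supply the real credentials only when running the command interactively.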